🔌 Top 10 Ultimate Embedded Systems Projects for 2025: From Microcontrollers to Smart Innovation

Why Embedded Systems Projects Matter in 2025: A Deep Dive

Embedded systems are the silent backbone of modern tech—powering everything from smartwatches to industrial robots. In 2025, demand is booming for engineers who can design efficient, low-level systems that interact with the physical world. Whether you're building for IoT, automotive, aerospace, or consumer electronics, embedded projects are your ticket to a future-proof skill set.

If you're aiming to crack core jobs, dominate embedded interviews, or stand out in the sea of resumes, these 10 cutting-edge projects will give your portfolio the power it needs.

The Unseen Powerhouse: Why Embedded Systems are More Critical Than Ever

In 2025, the pervasive nature of technology means embedded systems are no longer just components; they are the intelligent core of countless innovations. Their importance is driven by several converging trends:

1. Explosive Growth of IoT (Internet of Things): From smart homes and connected cities to industrial IoT (IIoT) and smart agriculture, virtually every "thing" that connects to the internet relies on an embedded system. These devices need to be low-power, efficient, secure, and capable of real-time processing, all of which fall squarely within the domain of embedded systems engineering. Project experience in IoT, especially with edge computing and sensor integration, is highly sought after.

2. The Rise of AI at the Edge: As AI moves beyond the cloud, embedded systems are becoming crucial platforms for deploying machine learning models directly on devices. This "AI at the edge" enables faster decision-making, reduced latency, enhanced privacy, and lower bandwidth consumption. Projects demonstrating AI/ML integration on microcontrollers or embedded Linux platforms are incredibly valuable.

3. Automotive and Autonomous Vehicles: The automotive industry is undergoing a massive transformation, with embedded systems at its heart. Modern vehicles contain dozens, and in premium models more than a hundred, electronic control units (ECUs) managing everything from engine performance and infotainment to advanced driver-assistance systems (ADAS) and fully autonomous driving. Expertise in automotive-grade embedded systems, functional safety (ISO 26262), and real-time operating systems (RTOS) is paramount.

4. Industrial Automation and Robotics (Industry 4.0): The push towards Industry 4.0 demands intelligent, interconnected machinery. Embedded systems are the brains behind industrial robots, automated assembly lines, predictive maintenance sensors, and sophisticated control systems. Projects involving industrial communication protocols (e.g., Modbus, EtherCAT), motor control, and sensor fusion are highly relevant.

5. Miniaturization and Power Efficiency: Consumer demand for smaller, more powerful, and longer-lasting devices (wearables, smartphones, medical implants) constantly pushes the boundaries of embedded system design. Engineers proficient in ultra-low-power design, battery management, and optimizing code for constrained environments are in high demand.

6. Security and Reliability: With more devices connected and controlling critical functions, the security and reliability of embedded systems are non-negotiable. Designing systems resistant to cyber threats, ensuring data integrity, and implementing robust error handling are vital skills that embedded projects can showcase.

The Unrivaled Benefits of Embedded Systems Projects for Your Career

Engaging in embedded systems projects in 2025 offers concrete advantages for aspiring and experienced engineers alike:

- Mastery of Core Engineering Principles: Embedded projects force you to grapple with fundamental concepts like microcontroller architectures, digital logic, analog circuits, data structures, algorithms, and real-time constraints. This deep technical understanding is invaluable for any engineering discipline.

- Hands-on Hardware-Software Integration: Unlike purely software or hardware roles, embedded systems demand a holistic understanding of how code interacts with physical components. This practical experience is highly valued by employers.

- Problem-Solving Prowess: Debugging embedded systems often requires creative problem-solving, combining knowledge of electronics, programming, and system-level thinking. This hones critical analytical skills.

- Showcasing Practical Skills over Theory: While academic knowledge is important, practical projects demonstrate your ability to apply theory to real-world challenges, making you a more attractive candidate.

- Building a Tangible Portfolio: A well-documented embedded project provides a concrete example of your skills and dedication, setting you apart from candidates with only theoretical knowledge or generic software projects.

- Gateway to Niche and High-Demand Fields: Embedded systems skills open doors to specialized and well-paying sectors like automotive, aerospace, medical devices, defense, and industrial automation.

- Future-Proofing Your Skill Set: As technology continues to evolve, the fundamental principles of embedded systems design remain constant. The ability to work at the hardware-software interface will always be in demand, regardless of specific technologies.

- Cracking Core Jobs and Dominating Interviews: Companies hiring for embedded roles are keenly interested in candidates who have tinkered with microcontrollers, written low-level drivers, or debugged hardware issues. Projects provide perfect talking points and demonstrate genuine interest and capability.

By focusing on cutting-edge embedded projects in 2025, you're not just building devices; you're building a robust, future-proof career path in one of the most critical and exciting fields of modern technology.

Table of Contents:

1. Smart Health Monitoring Wearable

2. Gesture-Controlled Smart Home System

3. ESP32-Based Weather Station with Web Dashboard

4. Smart Attendance System with RFID + Cloud Sync

5. Automated Plant Irrigation System

6. Voice-Controlled Robot Using Arduino & Bluetooth

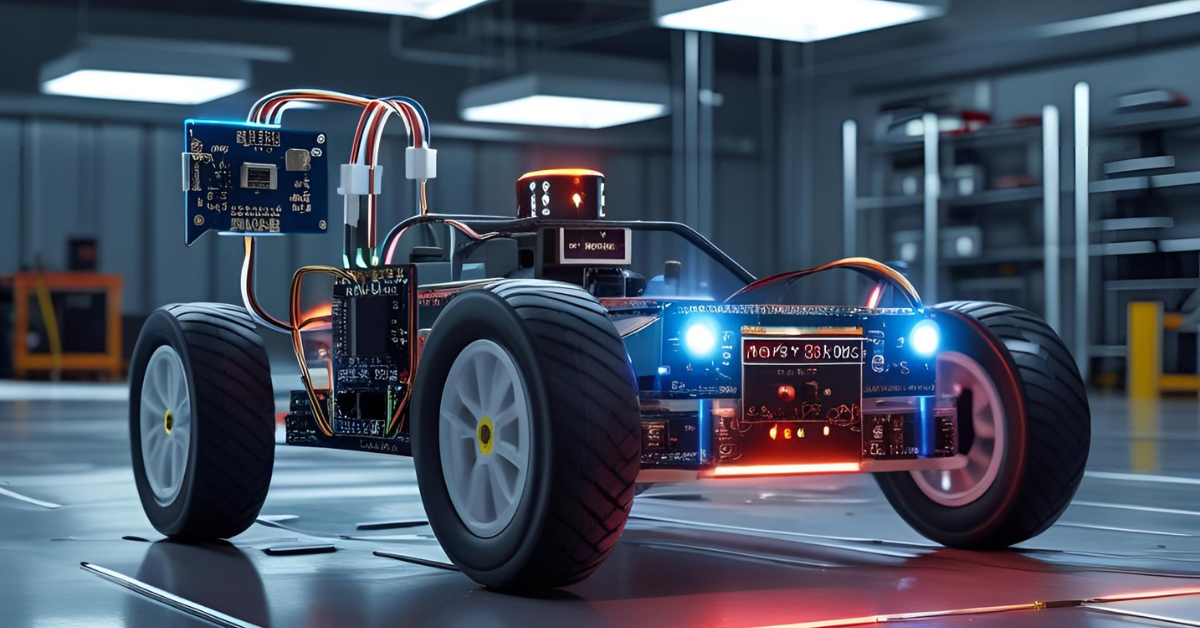

7. Real-Time Object Avoiding Car (Ultrasonic + L298N)

8. Home Security System with GSM & PIR Sensors

9. Industrial Machine Monitoring Unit (Vibration + MQTT)

10. AI-Powered Face Detection System with Raspberry Pi + OpenCV

1. Smart Health Monitoring Wearable

🧠 Tech Stack:

- Microcontroller: Arduino Nano (or ESP32 for advanced features/Wi-Fi)

- Sensors:

- Pulse Sensor (e.g., KY-039 or MAX30102 for heart rate and SpO2)

- Temperature Sensor (e.g., DS18B20 or LM35, optional but recommended for comprehensive monitoring)

- Display: OLED Display (e.g., 0.96" I2C OLED)

- Communication: Bluetooth module (e.g., HC-05 for Bluetooth Classic serial, HM-10 for BLE, or the ESP32's built-in Bluetooth/BLE)

- Power: Small LiPo Battery with charging module (e.g., TP4056)

- Enclosure: 3D-printed wristband or compact casing

📦 Project Overview & Concept:

The Smart Health Monitoring Wearable is a miniature, non-invasive device designed to continuously or periodically track essential physiological parameters of the user. The core concept revolves around empowering individuals with real-time insights into their health, enabling proactive health management and providing peace of mind.

The device will be worn on the wrist, finger, or another suitable body part. It will leverage a combination of sensors to acquire vital data. For instance, a pulse sensor will detect heart rate and, if using an advanced sensor like the MAX30102, also measure blood oxygen saturation (SpO2). An optional temperature sensor can provide body temperature readings.

The collected data will be processed by the Arduino Nano (or ESP32), displayed instantly on a compact OLED screen for immediate feedback to the user, and simultaneously transmitted over Bluetooth (Bluetooth Classic serial with an HC-05, or Bluetooth Low Energy with an HM-10 or the ESP32) to a connected smartphone application. The mobile app, developed separately, will serve as a dashboard for visualizing trends, logging historical data, setting up custom alerts for abnormal readings, and potentially sharing data with healthcare providers (with user consent).
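Turning raw pulse-sensor beats into a steady heart-rate number is the core of that processing step. Below is a minimal sketch, written in plain C++ so it can also be tested on a PC, assuming your beat detector (for example, a threshold crossing on the pulse waveform) reports each beat's timestamp in milliseconds. The class name BeatAverager, the four-interval window, and the 30 to 200 BPM plausibility limits are hypothetical choices, not taken from the project code:

```cpp
#include <stdint.h>

// Hypothetical helper: turns beat timestamps into a smoothed BPM value by
// averaging the most recent inter-beat intervals (IBIs).
class BeatAverager {
 public:
  static constexpr int kWindow = 4;  // number of intervals averaged (assumption)

  // Call once per detected beat with the time in milliseconds
  // (on the Arduino this would typically be millis()).
  void onBeat(uint32_t nowMs) {
    if (haveLastBeat_) {
      uint32_t ibi = nowMs - lastBeatMs_;
      if (ibi < 300) return;  // faster than 200 BPM: treat as noise, ignore
      if (ibi <= 2000) {      // 30 BPM or faster: plausible, record it
        intervals_[next_] = ibi;
        next_ = (next_ + 1) % kWindow;
        if (count_ < kWindow) count_++;
      }
      // Gaps over 2 s (e.g. finger lifted) are not recorded, but this beat
      // still becomes the new reference point.
    }
    lastBeatMs_ = nowMs;
    haveLastBeat_ = true;
  }

  // Average BPM over the stored intervals, or 0 if none yet.
  int bpm() const {
    if (count_ == 0) return 0;
    uint32_t sum = 0;
    for (int i = 0; i < count_; i++) sum += intervals_[i];
    return static_cast<int>(60000UL * count_ / sum);
  }

 private:
  uint32_t intervals_[kWindow] = {};
  int next_ = 0;
  int count_ = 0;
  uint32_t lastBeatMs_ = 0;
  bool haveLastBeat_ = false;
};
```

On the Nano you would call onBeat(millis()) whenever a beat is detected and show bpm() on the OLED. Averaging several intervals trades a little responsiveness for a reading that does not jump on every beat.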

This project requires careful consideration of power efficiency, sensor accuracy, compact design, and robust wireless communication, making it an excellent demonstration of embedded systems design for wearable technology.

📈 Why Build It: Benefits & Impact

Building a Smart Health Monitoring Wearable in 2025 is highly relevant and offers numerous benefits, both personally and professionally:

- Rising Health Awareness & Remote Monitoring: The global focus on personal health and preventive care has surged. There's a growing demand for devices that enable individuals to monitor their health from home, reducing the need for frequent clinic visits, especially for chronic condition management.

- Market Demand for Wearables: The wearable technology market continues its explosive growth. Gaining experience in this sector positions you at the forefront of consumer electronics and health tech innovation.

- Proactive Health Management: This device facilitates early detection of potential health issues, allowing users to take timely action or seek medical advice, potentially preventing serious conditions.

- Peace of Mind for Users and Caregivers: For individuals with specific health concerns or for families monitoring elderly relatives, the ability to receive real-time alerts for critical vital signs offers significant reassurance.

- Skill Enhancement for Embedded Engineers: This project is a comprehensive learning experience, covering:

- Sensor Interfacing: Working with analog and digital sensors (Pulse, SpO2, Temperature).

- Microcontroller Programming: Efficient data acquisition, processing, and display.

- Low-Power Design: Essential for battery-powered wearables.

- Wireless Communication (BLE): Understanding protocols for data transmission to mobile devices.

- Basic UI/UX: Designing an intuitive display for the wearable.

- Mobile App Integration: (If you extend to building the app) Experience in full-stack IoT development.

- Enclosure Design: Practical experience in mechanical design and manufacturing (e.g., 3D printing).

- Portfolio Differentiator: A functional, well-documented smart health wearable is a powerful project for your resume, showcasing expertise in IoT, health tech, and embedded systems, which are highly sought after by employers in various industries (medical devices, consumer electronics, sports tech).

🏥 Use Cases:

This Smart Health Monitoring Wearable has a wide array of practical applications:

- Personal Fitness & Wellness:

- Fitness Tracking: Monitoring heart rate during exercise, helping users stay within target heart rate zones for optimal workouts.

- Stress Monitoring: Tracking resting heart rate variability as an indicator of stress levels.

- Sleep Tracking: (With advanced algorithms) Monitoring heart rate patterns during sleep to infer sleep stages.

- Elderly Care & Remote Monitoring:

- Fall Detection (with additional accelerometer): Sending alerts to caregivers if a fall is detected.

- Vitals Tracking for Seniors: Remotely monitoring heart rate and SpO2 for elderly individuals, providing reassurance to family members or caregivers.

- Medication Reminders: (Integrated with the mobile app) Sending notifications to the wearable.

- Chronic Disease Management:

- Cardiac Patients: Regular monitoring of heart rate and rhythm for individuals with heart conditions.

- Respiratory Conditions: SpO2 monitoring for patients with asthma, COPD, or other respiratory illnesses.

- Post-Operative Monitoring: Basic vital sign tracking during recovery at home.

- Workplace Safety:

- Monitoring vital signs of workers in hazardous environments (e.g., high-temperature areas, confined spaces) to detect signs of fatigue or distress.

- Sports & Athletics:

- Monitoring athletes' physiological responses during training to optimize performance and prevent overexertion.

- Educational & Hobbyist Tool:

- An excellent platform for learning about sensor integration, embedded programming, and wireless communication.

This project is a perfect blend of hardware, software, and real-world utility, making it an incredibly valuable addition to any embedded systems portfolio.

Project 1: Smart Health Monitoring Wearable Code:

🔗 View Project Code on GitHub

How to Use and Set Up:

1. Arduino IDE Setup:

- Download and install the Arduino IDE if you haven't already.

- Go to Tools > Board > Arduino AVR Boards and select Arduino Nano.

- Go to Tools > Port and select the serial port connected to your Arduino Nano.

2. Install Libraries:

- Open the Arduino IDE.

- Go to Sketch > Include Library > Manage Libraries...

- Search for and install the following libraries: Adafruit GFX Library, Adafruit SSD1306, Adafruit MAX30105, OneWire, and DallasTemperature.

- The SoftwareSerial library is built into the Arduino IDE, so no extra installation is needed for it.

3. Wiring:

- Follow the detailed wiring guide provided in the comments within the code. Pay special attention to the DS18B20's 4.7 kΩ pull-up resistor between its data line and VCC. Most HC-05 modules have an onboard regulator and accept 5V on VCC, but their RX pin still uses 3.3V logic, so add a voltage divider (e.g., 1 kΩ and 2 kΩ) on the line from the Arduino pin that transmits to the HC-05's RX pin.

4. Upload the Code:

- Copy the entire code block into your Arduino IDE.

- Click the "Verify" button (checkmark icon) to compile the code.

- Click the "Upload" button (right arrow icon) to upload the code to your Arduino Nano.

5. Monitor Output:

- Open the Serial Monitor (Tools > Serial Monitor) with the baud rate set to 9600 to see debugging information and sensor readings.

6. Bluetooth Connection:

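- Once the Arduino is running, the HC-05/HM-10 module should become discoverable by your smartphone.

- HC-05 (Bluetooth Classic): pair your phone with the module in the phone's Bluetooth settings. The default PIN is usually 1234 or 0000. HC-05 serial connections work with Android phones but not with iPhones, which only connect to BLE modules such as the HM-10.

- HM-10 (BLE): skip system pairing and connect directly from a BLE terminal app.

- Use a generic Bluetooth serial terminal app on your smartphone (search "Bluetooth Serial Terminal" on the Play Store, or a BLE terminal app on the App Store for the HM-10). Connect to the module. You should start receiving the formatted sensor data, one reading per line (e.g., HR:75,SpO2:98.5,TempC:36.7).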
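On the receiving side (a companion app, a PC logging script, or a second microcontroller), each line has to be split back into its fields. A minimal C++ sketch that parses the example format above, assuming all three fields always arrive in the order HR, SpO2, TempC; the Vitals struct and parseVitals function are hypothetical names:

```cpp
#include <cstdio>

// Hypothetical container for one reading from the wearable.
struct Vitals {
  int heartRate = 0;    // beats per minute
  float spo2 = 0.0f;    // percent
  float tempC = 0.0f;   // degrees Celsius
};

// Parses a line such as "HR:75,SpO2:98.5,TempC:36.7" (a trailing newline is fine).
// Returns true and fills `out` only if all three fields were read and look sane.
bool parseVitals(const char* line, Vitals& out) {
  Vitals v;
  int matched = std::sscanf(line, "HR:%d,SpO2:%f,TempC:%f",
                            &v.heartRate, &v.spo2, &v.tempC);
  if (matched != 3) return false;  // truncated or malformed packet
  // Basic sanity check to discard corrupted or nonsensical readings.
  if (v.heartRate <= 0 || v.spo2 < 0.0f || v.spo2 > 100.0f) return false;
  out = v;
  return true;
}
```

On the Nano itself, note that the default AVR printf family omits floating-point support, so snprintf with %f does not work there out of the box; the sending side is usually built from Serial.print() calls or dtostrf() instead.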

Next Steps and Improvements:

- Accurate SpO2 Calculation: The Adafruit_MAX30105 library provides raw IR/Red data and a basic SpO2 function. For more reliable readings, implement a more sophisticated SpO2 algorithm (based on Maxim Integrated's application notes or more advanced libraries like SparkFun's MAX30102 library).

- Mobile Application Development: Develop a dedicated Android/iOS app to connect to the Bluetooth module, parse the incoming data, display it clearly (e.g., using charts), log historical data, and set up custom alerts.

- Power Optimization: For a true wearable, implement deeper sleep modes for the Arduino Nano (if using just Nano) or utilize the ESP32's advanced power management features (Light Sleep, Deep Sleep) if you switch to it.

- Data Storage: Implement local data storage on an SD card module if you want to log data without continuous Bluetooth connection.

- Enclosure Design: Refine your 3D-printed enclosure for comfort, durability, and sensor placement for optimal readings.

- Error Handling: Add more robust error handling for sensor failures, Bluetooth connection drops, and invalid readings.

- Calibration: Calibrate your temperature and pulse oximetry readings against known accurate devices.
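Power optimization pays off because battery life depends on the average current, not the peak. A back-of-the-envelope estimator for a duty-cycled wearable is sketched below; the function is hypothetical and ignores regulator quiescent current, battery aging, and self-discharge, so treat its result as an upper bound:

```cpp
// Estimates battery life in hours for a device that alternates between
// an active state and a sleep state.
//   capacityMah    : battery capacity in mAh
//   activeMa       : current draw while awake, in mA
//   sleepMa        : current draw while asleep, in mA
//   activeFraction : fraction of time spent awake (0.0 to 1.0)
// Assumes the resulting average current is greater than zero.
double estimateBatteryHours(double capacityMah, double activeMa,
                            double sleepMa, double activeFraction) {
  // Time-weighted average current over one duty cycle.
  double avgMa = activeMa * activeFraction + sleepMa * (1.0 - activeFraction);
  return capacityMah / avgMa;
}
```

For example, a 200 mAh LiPo powering a device that draws 20 mA for 10% of the time and 0.5 mA while asleep averages 2.45 mA, or roughly 82 hours, versus 10 hours if it stayed awake at 20 mA. These current figures are illustrative; measure your own board with a multimeter in series with the battery.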

2. Gesture-Controlled Smart Home System

🧠 Tech Stack:

- Microcontrollers:

- Transmitter Unit: Arduino Uno (or a smaller Pro Mini/Nano for wearable comfort)

- Receiver Unit: Arduino Uno (or NodeMCU/ESP32 for Wi-Fi capabilities if extending)

- Sensors:

- Accelerometer: ADXL345 (3-axis accelerometer for detecting motion and orientation)

- Wireless Communication:

- RF Transmitter/Receiver Module: Either a 433 MHz pair (e.g., FS1000A/XY-MK-5V) for basic one-way on/off signaling, or a 2.4 GHz NRF24L01 for more robust two-way communication. For higher reliability (hardware addressing, acknowledgments, and retries), the NRF24L01 is highly recommended, and the setup below uses it.

- Actuators/Controlled Devices:

- Relay Module: 1, 2, 4, or 8-channel relay board (to switch AC loads like lights, fans).

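- Appliances: Standard household loads such as lights, fans, and lamps, connected via the relays. Check each relay's rating against the appliance's current; air conditioners and other high-inrush loads usually need a contactor rather than a hobby relay board.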

- Optional Enhancements:

- Display: Small OLED or LCD for status feedback on the transmitter or receiver.

- Power: 9V battery for the transmitter unit, USB/external power supply for the receiver.

- Enclosures: 3D-printed cases for both the wearable gesture unit and the receiver box.

📦 Project Overview & Concept:

The Gesture-Controlled Smart Home System aims to provide an intuitive and futuristic way to interact with home appliances using simple hand gestures. Imagine a user wearing a small device on their hand or wrist; a flick of the wrist turns on the lights, a hand wave adjusts the fan speed, or a specific motion changes the TV channel.

The system comprises two main parts:

1. The Transmitter Unit (Gesture Module): This is the wearable component, typically built around an Arduino Uno (or smaller form factor) and an ADXL345 accelerometer. The accelerometer continuously reads the motion and orientation of the user's hand. Specific patterns of movement (e.g., an upward flick, a left-to-right swipe, a circular motion) are programmed and mapped to distinct commands. Once a gesture is recognized, the Arduino encodes this command and transmits it wirelessly via the RF transmitter module.

2. The Receiver Unit (Home Control Module): This unit, also typically an Arduino Uno, is connected to the RF receiver module and a relay board. It constantly listens for incoming wireless commands. Upon receiving a command, the Arduino decodes it and, based on the pre-programmed mapping, activates or deactivates the corresponding relay. Each relay is connected to a specific home appliance (e.g., a light bulb, a fan, a socket for a TV).
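The transmitter's recognition step can start very simply: look for an acceleration peak on the X or Y axis, and ignore repeat detections for a short cooldown so one flick sends one command. A minimal sketch under these assumptions: readings are in m/s² (as the Adafruit Unified Sensor API reports them), the enum values double as one-byte radio commands, and all names and threshold values are hypothetical:

```cpp
// Hypothetical one-byte command codes for the radio link.
enum Gesture : unsigned char {
  GESTURE_NONE = 0,
  GESTURE_RIGHT = 1,
  GESTURE_LEFT = 2,
  GESTURE_UP = 3,
  GESTURE_DOWN = 4
};

// Classifies one accelerometer sample (m/s^2, gravity included) by the axis
// with the larger magnitude. Which sign counts as "right" or "up" depends on
// how the sensor is mounted on the hand (assumption).
Gesture classifySample(float ax, float ay, float threshold) {
  float absX = ax < 0 ? -ax : ax;
  float absY = ay < 0 ? -ay : ay;
  if (absX < threshold && absY < threshold) return GESTURE_NONE;
  if (absX >= absY) return ax > 0 ? GESTURE_RIGHT : GESTURE_LEFT;
  return ay > 0 ? GESTURE_UP : GESTURE_DOWN;
}

// Adds a cooldown so a single flick, whose peak spans several samples,
// is reported (and transmitted) only once.
class GestureDetector {
 public:
  GestureDetector(float threshold, unsigned long cooldownMs)
      : threshold_(threshold), cooldownMs_(cooldownMs) {}

  // nowMs would typically come from millis() on the Arduino.
  Gesture update(float ax, float ay, unsigned long nowMs) {
    if (fired_ && nowMs - lastFireMs_ < cooldownMs_) return GESTURE_NONE;
    Gesture g = classifySample(ax, ay, threshold_);
    if (g != GESTURE_NONE) {
      fired_ = true;
      lastFireMs_ = nowMs;
    }
    return g;
  }

 private:
  float threshold_;
  unsigned long cooldownMs_;
  unsigned long lastFireMs_ = 0;
  bool fired_ = false;
};
```

A threshold above 1 g (about 9.8 m/s²), such as 12 m/s², keeps a hand that is merely tilted from triggering, since gravity alone can put up to 9.8 m/s² on the X or Y axis. The cooldown (for example, 500 ms) is a tuning value to adjust by trial.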

This project delves into motion sensing, pattern recognition (even simple ones), wireless data transmission, and electrical switching, offering a comprehensive dive into embedded control for home automation.

📈 Why Build It: Benefits & Impact

Building a Gesture-Controlled Smart Home System in 2025 offers compelling advantages:

- Exploration of Human-Computer Interaction (HCI): This project moves beyond traditional buttons and switches, exploring a more natural and expressive form of control. It's an excellent way to experiment with intuitive user interfaces.

- Intersection of UX, Embedded Control, and Wireless Protocols: It perfectly demonstrates how these three critical areas converge:

- User Experience (UX): Designing gestures that are natural, memorable, and efficient.

- Embedded Control: Programming microcontrollers to interpret sensor data and control external hardware.

- Wireless Protocols: Understanding how data is transmitted and received reliably over the air.

- Future of Smart Homes: While voice control is prevalent, gesture control offers an alternative, silent, and often quicker interaction method, especially in environments where voice commands might be impractical or undesirable.

- Skill Development in Key Areas:

- Sensor Data Processing: Reading and interpreting raw accelerometer data, filtering noise, and detecting specific motion patterns.

- Wireless Communication: Implementing robust data encoding and decoding for reliable RF transmission.

- Relay Control: Safely interfacing microcontrollers with AC mains power through relays.

- Algorithm Design: Developing algorithms for gesture recognition.

- System Integration: Bringing together multiple hardware components and software modules to form a cohesive system.

- Portfolio Standout: A gesture-controlled system is visually impressive and demonstrates innovative thinking, making your portfolio memorable to potential employers in IoT, home automation, and human-interface design roles.

- Accessibility Potential: Gesture control can offer an alternative interface for individuals with mobility challenges, contributing to assistive technology solutions.

🏡 Use Cases:

The applications of a Gesture-Controlled Smart Home System extend beyond simple on/off control:

- Home Automation & Convenience:

- Lighting Control: Turning lights on/off, dimming, or changing colors with specific hand movements.

- Fan Speed Control: Gesturing up or down to increase or decrease fan speed.

- Appliance Activation: Turning on/off power to coffee makers, stereos, or other plug-in devices via smart plugs connected to relays.

- Curtain/Blind Control: Opening or closing motorized blinds with a simple gesture.

- Entertainment Systems:

- TV/Media Player Control: Changing channels, adjusting volume, pausing/playing media.

- Gaming: Potentially used as a custom controller for simple interactive games.

- Accessibility Solutions:

- Assisted Living: Providing an alternative control method for individuals with limited mobility who might struggle with small buttons or voice commands.

- Industrial & Robotics Control (Advanced Concept):

- Remote Robot Manipulation: Imagine controlling a robotic arm's movement with hand gestures (requires much higher precision and feedback, but the foundation is similar).

- Warehouse Automation: Triggering specific actions in automated systems with predefined gestures.

- Interactive Displays/Presentations:

- Controlling slides, zooming in/out, or interacting with digital content using hand gestures in a presentation setting.

This project is not just about making things smart; it's about making them interact with us in a more intuitive, natural, and engaging way.

Project 2: Gesture-Controlled Smart Home System Code:

🔗 View Project Code on GitHub

How to Use and Set Up:

This project requires two separate Arduino boards. You will upload the Transmitter Unit code to one Arduino (e.g., Arduino Nano for the wearable part) and the Receiver Unit code to another Arduino (e.g., Arduino Uno for the home control part).

1. Arduino IDE Setup:

- Download and install the Arduino IDE.

- Go to Tools > Board > Arduino AVR Boards and select Arduino Uno (for the receiver) and Arduino Nano (for the transmitter, if applicable).

- Select the correct port under Tools > Port for each board when uploading.

2. Install Libraries:

- Open the Arduino IDE.

- Go to Sketch > Include Library > Manage Libraries...

- Search for and install: RF24 by TMRh20, Adafruit Unified Sensor (for the transmitter), and Adafruit ADXL345 (for the transmitter).

- The SPI and Wire libraries are built in.

3. Wiring Guide:

Transmitter Unit (Arduino Nano / Uno):

- NRF24L01:

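- VCC to 3.3V (important! The NRF24L01 is a 3.3V part and 5V will damage it, unless you use an adapter board with its own regulator. A 10 µF capacitor across VCC and GND, close to the module, improves stability.)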

- GND to GND

- CE to Digital Pin 9

- CSN to Digital Pin 10

- SCK to Digital Pin 13 (SPI Clock)

- MOSI to Digital Pin 11 (SPI Data Out)

- MISO to Digital Pin 12 (SPI Data In)

- ADXL345 (I2C):

- VCC to 3.3V, or to 5V if your breakout board has an onboard regulator

- GND to GND

- SDA to Analog Pin A4

- SCL to Analog Pin A5

Receiver Unit (Arduino Uno):

- NRF24L01:

- VCC to 3.3V (important!)

- GND to GND

- CE to Digital Pin 9

- CSN to Digital Pin 10

- SCK to Digital Pin 13

- MOSI to Digital Pin 11

- MISO to Digital Pin 12

- Relay Module (e.g., 4-channel relay):

- VCC to 5V (for relay power, can be Arduino's 5V or external)

- GND to GND

- IN1 to Digital Pin 2 (LIGHT_RELAY_PIN)

- IN2 to Digital Pin 3 (FAN_RELAY_PIN)

- IN3 to Digital Pin 4 (AC_RELAY_PIN)

- Connect the NO (Normally Open) and Common terminals of the relays to your AC appliances. Exercise extreme caution when working with AC mains voltage. If unsure, consult a qualified electrician.
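Many inexpensive relay boards are active-LOW: the relay energizes when its IN pin is driven LOW, which makes it easy to invert every appliance by mistake. Below is a hardware-independent sketch of the receiver's decode step; the command characters 'L', 'F', and 'A', the toggle behavior, and the function names are hypothetical, and on the Arduino you would pass each returned level to digitalWrite() for LIGHT_RELAY_PIN, FAN_RELAY_PIN, or AC_RELAY_PIN:

```cpp
// Hypothetical one-byte commands received over the NRF24L01.
const char CMD_LIGHT = 'L';
const char CMD_FAN = 'F';
const char CMD_AC = 'A';

// Logical on/off state of each relay channel.
struct RelayState {
  bool light = false;
  bool fan = false;
  bool ac = false;
};

// Toggles the channel named by cmd. Unknown commands return false and
// leave the state untouched (a cheap guard against corrupted packets).
bool applyCommand(char cmd, RelayState& s) {
  switch (cmd) {
    case CMD_LIGHT: s.light = !s.light; return true;
    case CMD_FAN:   s.fan = !s.fan;     return true;
    case CMD_AC:    s.ac = !s.ac;       return true;
    default:        return false;
  }
}

// Converts a logical on/off state into the pin level to write
// (0 = LOW, 1 = HIGH). Active-LOW boards energize the relay on LOW.
int relayPinLevel(bool on, bool activeLow) {
  if (activeLow) return on ? 0 : 1;
  return on ? 1 : 0;
}
```

On AVR boards such as the Uno, an active-LOW relay board also benefits from writing HIGH to each relay pin before calling pinMode(pin, OUTPUT) in setup(), so the appliances do not switch on briefly at boot.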

4. Upload the Code:

- For Transmitter:

- In the provided code, uncomment the "// Uncomment this block for the TRANSMITTER UNIT" section and comment out the Receiver Unit section.

- Upload this code to your Transmitter Arduino.

- For Receiver:

- In the provided code, uncomment the "// Uncomment this block for the RECEIVER UNIT" section and comment out the Transmitter Unit section.

- Upload this code to your Receiver Arduino.

5. Testing:

- Open the Serial Monitor for both Arduinos (one at a time, or use two instances of the IDE/serial monitor programs if your OS allows).

- Observe the "Transmitter" output when you perform gestures.

- Observe the "Receiver" output and how the relays click in response to the gestures.

Next Steps and Improvements:

- Gesture Recognition Refinement: The current gesture detection is basic (based on peak acceleration in X or Y). You could implement:

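- Machine Learning: Train a small ML model (e.g., using TinyML/TensorFlow Lite Micro) on different gesture patterns for more robust and complex gesture recognition. This needs a more capable board than the ATmega328P-based Uno or Nano, such as an ESP32 or an Arduino Nano 33 BLE Sense.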

- State Machine: A more sophisticated state machine to track sequences of movements for complex gestures.

- Dynamic Thresholds: Adjust thresholds based on environmental noise or user calibration.

- Two-Way Communication: Implement a system where the receiver sends an acknowledgment back to the transmitter to confirm command reception.

- User Feedback: Add LEDs or a small buzzer to the transmitter unit to give feedback on gesture recognition and successful command transmission.

- Power Optimization: For the wearable transmitter, optimize power consumption by putting the Arduino and NRF24L01 into sleep modes between gesture checks.

- Mobile App Control: Instead of fixed gestures, you could build a mobile app that allows users to train custom gestures and associate them with commands, making the system more versatile.

- More Appliances: Expand the number of relays and commands to control more appliances.

- Safety Features: Implement emergency stop mechanisms or fail-safes for the home appliances.

- Enclosure: Design and 3D-print a comfortable, ergonomic enclosure for the wearable transmitter unit and a secure box for the receiver unit.
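For the Dynamic Thresholds idea, one simple calibration is to record the accelerometer while the user holds still for a few seconds, then set the trigger threshold a few standard deviations above the observed noise. A minimal sketch using Welford's online mean/variance algorithm, which needs no sample buffer on a RAM-limited Uno; the class name and the k and minimum-threshold parameters are hypothetical:

```cpp
#include <math.h>

// Collects samples of one axis while the hand is held still and derives
// a trigger threshold from the observed noise (Welford's algorithm).
class ThresholdCalibrator {
 public:
  void addSample(double x) {
    n_++;
    double delta = x - mean_;
    mean_ += delta / n_;
    m2_ += delta * (x - mean_);  // uses the updated mean
  }

  double mean() const { return mean_; }

  // Sample standard deviation; 0 until at least two samples are seen.
  double stddev() const { return n_ > 1 ? sqrt(m2_ / (n_ - 1)) : 0.0; }

  // |mean| + k standard deviations, but never below minThreshold.
  double threshold(double k, double minThreshold) const {
    double t = fabs(mean_) + k * stddev();
    return t > minThreshold ? t : minThreshold;
  }

 private:
  long n_ = 0;
  double mean_ = 0.0;
  double m2_ = 0.0;
};
```

A floor slightly above 1 g (for example, 12 m/s²) ensures gravity alone never triggers a gesture, while a noisy environment (a moving vehicle, a shaky hand) raises the threshold automatically.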

🚀 Ready to turn your passion for hardware into real-world innovation?

At Huebits, we don’t just teach Embedded Systems — we train you to build smart, connected, real-time solutions using the tech stacks that power today’s most advanced devices.

From microcontrollers to IoT deployments, you’ll gain hands-on experience building end-to-end systems that sense, compute, and communicate — built to thrive in the field, not just on paper.

🧠 Whether you're a student, aspiring embedded engineer, or future IoT architect, our Industry-Ready Embedded Systems & IoT Engineering Program is your launchpad.

Master C, Embedded C++, MicroPython, FreeRTOS, ESP32, STM32, and cloud integration with AWS IoT — all while working on real-world projects that demand precision, problem-solving, and execution.

🎓 Next Cohort Starts Soon!

🔗 Join Now and secure your place in the IoT revolution powering tomorrow’s ₹1 trillion+ connected economy.

3. ESP32-Based Weather Station with Web Dashboard

🧠 Tech Stack:

- Microcontroller: ESP32 (e.g., ESP32-WROOM-32 DevKitC) - Chosen for its integrated Wi-Fi and Bluetooth, dual-core processor, and ample GPIO pins.

- Sensors:

- DHT22: For accurate ambient temperature and humidity readings. (Alternative: BME280 for combined Temp/Humidity/Pressure, or DHT11 for basic, less accurate readings).

- BMP180 (or BMP280/BME280): For atmospheric pressure and additional temperature readings (BME280 is a strong upgrade as it combines all three parameters in one sensor).

- Optional Sensors:

- Light Sensor (LDR or BH1750): To measure ambient light intensity.

- Rain Sensor: To detect rainfall.

- UV Sensor: To measure UV index.

- Wind Speed/Direction Sensor: For advanced weather monitoring.

- Frontend/Web Technologies:

- HTML5: For structuring the web page.

- CSS3: For styling and layout of the dashboard.

- JavaScript: For dynamic content updates, data visualization (e.g., charts using libraries like Chart.js or D3.js), and asynchronous data fetching.

- Backend/Communication:

- ESP32 Web Server (ESPAsyncWebServer library): To serve the web pages and handle API requests for sensor data.

- MQTT (Optional but Recommended): For robust, lightweight, and efficient data communication to a broker, enabling remote access and integration with other IoT platforms.

- Wi-Fi Connectivity: Built-in to the ESP32.

📦 Project Overview & Concept:

The ESP32-Based Weather Station with Web Dashboard is a comprehensive IoT project that integrates hardware for data acquisition with software for data visualization and remote access. The core concept is to create a localized weather monitoring system that can be accessed from any web browser on the same network (or remotely, if configured).

The system consists of:

- Hardware Module: An ESP32 microcontroller board connected to various environmental sensors (DHT22 for temperature/humidity, BMP180 for pressure). This module will be housed in a protective, weather-resistant enclosure if deployed outdoors.

- Embedded Firmware: The ESP32 will run custom C++ firmware built with the Arduino framework (e.g., in the Arduino IDE). This firmware will:

- Initialize and read data from the connected sensors at regular intervals.

- Establish and maintain a Wi-Fi connection to the local network.

- Host a basic web server. When a client (web browser) connects, it serves the HTML, CSS, and JavaScript files for the dashboard.

- Implement an API endpoint (e.g., /data) that, when requested by the JavaScript in the web page, sends the latest sensor readings in a structured format (e.g., JSON).

- (Optional but Recommended) Publish sensor data to an MQTT broker, allowing the data to be easily consumed by other applications or cloud platforms.

- Web Dashboard: This is the user interface, developed using standard web technologies (HTML, CSS, JavaScript). It will be served directly from the ESP32's internal flash file system (LittleFS, or the older SPIFFS). The JavaScript code will periodically fetch updated sensor data from the ESP32's web server API and dynamically update the dashboard. This dashboard can display:

- Current temperature, humidity, and pressure readings.

- Historical graphs of these parameters over time.

- Status indicators (e.g., Wi-Fi connectivity).
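The /data endpoint only needs to return a small JSON object. A minimal sketch of building that body in standard C++ on the ESP32; the field names, units, and function names are assumptions to align with whatever your dashboard JavaScript reads:

```cpp
#include <cmath>
#include <cstdio>
#include <string>

// Formats one reading with one decimal place. Failed reads (DHT libraries
// commonly report them as NaN) become JSON null, because "nan" is not valid
// JSON and would make JSON.parse() in the dashboard throw.
std::string jsonNumber(float value) {
  if (!std::isfinite(value)) return "null";
  char buf[64];
  std::snprintf(buf, sizeof(buf), "%.1f", value);
  return std::string(buf);
}

// Builds the body for a GET /data response. Field names and units
// (degrees C, % relative humidity, hPa) are assumptions.
std::string buildDataJson(float temperatureC, float humidityPct, float pressureHpa) {
  return "{\"temperature\":" + jsonNumber(temperatureC) +
         ",\"humidity\":" + jsonNumber(humidityPct) +
         ",\"pressure\":" + jsonNumber(pressureHpa) + "}";
}
```

The string would then be sent from the /data route handler with the content type application/json, and the dashboard can fetch it periodically and treat null as "no reading".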

This project brilliantly showcases the ESP32's capabilities as a powerful IoT device, demonstrating local data acquisition, network communication, and web hosting in a single, compact package.

📈 Why Build It: Benefits & Impact

Building an ESP32-Based Weather Station with Web Dashboard in 2025 offers a wealth of benefits for your skill set and portfolio:

- Seamless Integration of Embedded Systems, IoT, and Frontend: This project is a perfect example of a full-stack IoT application. You'll gain hands-on experience across hardware interfacing, embedded programming, network communication, and web development.

- Mastering the ESP32: You'll become proficient with the ESP32's Wi-Fi capabilities, web server functionalities, and sensor integration, which are fundamental skills for countless IoT applications.

- Real-Time Data Handling: Learning to collect, process, and present real-time sensor data is crucial for many industrial, environmental, and consumer IoT projects.

- Practical Web Development Experience: You'll apply HTML, CSS, and JavaScript in a practical context, understanding how web technologies interact with embedded devices. Experience with fetching and parsing JSON data from an API is also valuable.

- Understanding Networking Protocols: You'll delve into HTTP for web serving and potentially MQTT for lightweight messaging, essential for scalable IoT solutions.

- Low-Cost, High-Impact Project: The components are relatively inexpensive, but the project's scope and the skills it teaches are significant, making it an excellent return on investment for your learning.

- Portfolio Powerhouse: This project clearly demonstrates your ability to build a complete IoT solution from sensor to dashboard, highly attractive to employers in smart home, environmental monitoring, agriculture tech, and general IoT development roles.

- Scalability Potential: The foundation laid by this project can easily be extended to larger sensor networks or integrated with cloud platforms (e.g., AWS IoT, Adafruit IO, ThingSpeak) for remote data access and advanced analytics.

🏡 Use Cases:

The ESP32-Based Weather Station, though a single unit, has diverse applications and can be a stepping stone for more complex systems:

- Personal Home Weather Monitoring:

- Get precise local temperature, humidity, and pressure readings in your backyard or living room, more representative of your exact location than regional forecasts.

- Monitor indoor climate for optimal comfort or health (e.g., preventing mold due to high humidity).

- Gardening & Agriculture:

- Monitor microclimates in greenhouses or small garden plots to optimize watering and plant health.

- Inform decisions on planting and harvesting based on local conditions.

- Educational Tool:

- An excellent hands-on project for students to learn about IoT, sensors, microcontrollers, and web development.

- Visualize environmental data for science experiments.

- Environmental Monitoring (Small Scale):

- Set up multiple stations in different locations to map temperature or humidity variations across a small area (e.g., a campus, a park).

- Monitoring conditions in remote cabins or sheds.

- Data Logging & Analysis:

- Collect long-term environmental data for trend analysis, research, or historical comparison.

- Export data for use in spreadsheets or data science tools.

- Smart Home Integration (Advanced):

- Use the collected data to trigger other smart home devices (e.g., turn on a dehumidifier if humidity is too high, adjust thermostat based on indoor temperature). This would typically involve integrating with a home automation hub like Home Assistant or Node-RED.

- Proof of Concept for Industrial IoT (IIoT):

- Demonstrate the principles of collecting environmental data in industrial settings, such as server rooms (temperature, humidity) or manufacturing floors (pressure, vibration - with different sensors).

This project provides a robust foundation for anyone looking to make a significant mark in the burgeoning field of connected devices and data-driven solutions.

Project 3: ESP32-Based Weather Station with Web Dashboard Code:

🔗 View Project Code on GitHub

How to Use and Set Up:

1. Arduino IDE Setup:

- Download and install the Arduino IDE if you haven't already.

- Install the ESP32 board package: go to File > Preferences and, in the "Additional Boards Manager URLs" field, add: https://raw.githubusercontent.com/espressif/arduino-esp32/gh-pages/package_esp32_index.json

- Then go to Tools > Board > Boards Manager..., search for "esp32", and install the "esp32 by Espressif Systems" package.

- Go to Tools > Board > ESP32 Arduino and select your specific ESP32 board (e.g., "ESP32 Dev Module" or "NodeMCU-32S").

- Go to Tools > Port and select the serial port connected to your ESP32.

2. Install Libraries:

- Open the Arduino IDE.

- Go to Sketch > Include Library > Manage Libraries....

- Search for and install the following libraries:

- AsyncTCP (by me-no-dev)

- ESPAsyncWebServer (by me-no-dev)

- Adafruit Unified Sensor (by Adafruit)

- DHT sensor library (by Adafruit)

- Adafruit BMP085 Library (supports the BMP180, by Adafruit)

- The WiFi.h and Wire.h libraries are built into the ESP32 board package.

3. Wiring:

- DHT22 Sensor:

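- VCC to 3.3V or 5V (check your specific DHT22 module; most accept 3.3V to 5.5V). Powering it from 3.3V keeps the data line within the ESP32's 3.3V GPIO limit.

- GND to GND.

- Data pin to ESP32 Digital Pin 16.

- Important: Add a 10K Ohm pull-up resistor between the Data pin and VCC (many breakout modules already include one).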

- BMP180 Sensor (I2C):

- VCC to 3.3V.

- GND to GND.

- SDA to ESP32 Digital Pin 21 (SDA).

- SCL to ESP32 Digital Pin 22 (SCL).

4. Configure Wi-Fi Credentials:

- In the project sketch, locate the lines:

C++

const char* ssid = "YOUR_SSID";
const char* password = "YOUR_PASSWORD";

- Replace "YOUR_SSID" with your actual Wi-Fi network name (SSID) and "YOUR_PASSWORD" with your Wi-Fi password. Keep the double quotes.

5. Upload the Code:

- Copy the entire sketch from the GitHub repository into your Arduino IDE.

- Click the "Verify" button (checkmark icon) to compile the code.

- Click the "Upload" button (right arrow icon) to upload the code to your ESP32.

6. Access the Web Dashboard:

- After uploading, open the Serial Monitor (Tools > Serial Monitor) with the baud rate set to 115200.

- The ESP32 will attempt to connect to your Wi-Fi. Once connected, it will print its assigned IP address to the Serial Monitor (e.g., 192.168.1.100).

- Open a web browser on any device connected to the same Wi-Fi network as your ESP32.

- Enter the IP Address displayed in the Serial Monitor into your browser's address bar.

- You should now see your "ESP32 Weather Station Dashboard" displaying live sensor data. The chart will begin to populate with temperature readings.
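The chart fills in as new readings arrive. If you would rather have the ESP32 keep a short history itself, so that a freshly opened page is not empty, a fixed-size ring buffer is the usual embedded choice: it uses constant memory and overwrites the oldest sample. This is a hypothetical addition, not part of the project code; `RingHistory` and the capacity you choose are illustrative.

```cpp
#include <array>
#include <cstddef>
#include <vector>

// Fixed-size history: keeps the newest N samples and overwrites the oldest.
template <typename T, std::size_t N>
class RingHistory {
 public:
  void push(const T& value) {
    data_[head_] = value;
    head_ = (head_ + 1) % N;  // next slot to overwrite
    if (count_ < N) ++count_;
  }

  std::size_t size() const { return count_; }

  // Samples from oldest to newest, the order a chart expects.
  std::vector<T> oldestFirst() const {
    std::vector<T> out;
    out.reserve(count_);
    const std::size_t start = (head_ + N - count_) % N;
    for (std::size_t i = 0; i < count_; ++i) {
      out.push_back(data_[(start + i) % N]);
    }
    return out;
  }

 private:
  std::array<T, N> data_{};
  std::size_t head_ = 0;
  std::size_t count_ = 0;
};
```

For example, a `RingHistory<float, 60>` filled once a minute would hold the last hour of temperatures in a fixed 240 bytes of sample storage.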

Next Steps and Improvements:

- Custom Enclosure: Design and 3D-print a suitable enclosure for your ESP32 and sensors, especially if you plan to deploy it outdoors (ensure it's weather-resistant).

- Data Logging & History:

- SD Card Module: Add an SD card module to the ESP32 to log sensor data locally. This can act as a backup or for longer-term data collection, independent of the web server.

- Cloud Integration: Instead of just a local web dashboard, integrate with a cloud IoT platform like Adafruit IO, Thingspeak, or AWS IoT Core. This allows you to:

- Access data remotely from anywhere.

- Store historical data in the cloud.

- Create more advanced dashboards and alerts.

- Utilize cloud analytics services.

- More Sensors: Expand the system by adding other environmental sensors:

- Light Sensor (LDR or BH1750): To measure ambient light intensity.

- Rain Sensor: To detect rainfall.

- UV Sensor: To measure UV index.

- Wind Speed/Direction Sensors: For a more complete weather station.

- Sensor Calibration: For professional applications, calibrate your sensors against known accurate instruments to ensure precise readings.

- Power Optimization: If you plan to run the weather station on a battery, investigate ESP32's deep sleep or light sleep modes to conserve power between readings.

- User Interface Enhancements:

- Improve the web dashboard's aesthetics and responsiveness further.

- Add more interactive elements or data visualization types (e.g., gauges, historical data tables).

- Over-the-Air (OTA) Updates: Implement OTA firmware updates for your ESP32, allowing you to upload new code wirelessly without physically connecting it via USB.

- Notifications/Alerts: Set up email or push notifications (via a cloud service or a custom script) for specific conditions (e.g., temperature drops below freezing, humidity exceeds a threshold).
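For alerts like these, a plain threshold check will fire repeatedly while a reading hovers around the limit. A common remedy is hysteresis: notify once when the value drops below a trigger level, then re-arm only after it rises past a separate release level. The class below is a minimal sketch of that pattern. The class name and the 0 °C / 1 °C levels in the usage note are assumptions, and actually sending the email or push message is left to whichever service you choose.

```cpp
// Low-threshold alert with hysteresis: notify once when the value falls below
// `trigger`, and re-arm only after it has risen above `release` (release > trigger).
class LowThresholdAlert {
 public:
  LowThresholdAlert(double trigger, double release)
      : trigger_(trigger), release_(release) {}

  // Feed each new reading; returns true only when a new notification is due.
  bool update(double value) {
    if (!active_ && value < trigger_) {
      active_ = true;
      return true;
    }
    if (active_ && value > release_) {
      active_ = false;  // condition cleared; the next drop will notify again
    }
    return false;
  }

  bool active() const { return active_; }

 private:
  double trigger_;
  double release_;
  bool active_ = false;
};
```

For example, create `LowThresholdAlert freeze(0.0, 1.0);` once, then call `if (freeze.update(temperatureC)) { /* send notification */ }` on each new reading. A high-humidity alert is the mirror image: swap the comparisons.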

4. Smart Attendance System with RFID + Cloud Sync

🧠 Tech Stack:

- Microcontroller: NodeMCU / ESP8266 (e.g., ESP-12E module on a dev board) - Chosen for its integrated Wi-Fi capabilities, making cloud connectivity straightforward.

- RFID Module: RC522 RFID Reader/Writer module - Compatible with 13.56MHz MIFARE cards/tags.

- Cloud Database: Google Firebase Realtime Database or Cloud Firestore - For real-time data storage, synchronization, and accessibility from any internet-connected device.

- Optional Hardware:

- OLED/LCD Display (e.g., 0.96" I2C OLED): To show "Attendance Marked!" or "Access Denied."

- Buzzer/LEDs: For audio/visual feedback (e.g., green LED for success, red for failure, a short beep).

- RTC Module (DS3231): For highly accurate timestamps, especially if Wi-Fi is intermittent (though ESP's NTP sync is usually sufficient if connected).

- Software/Libraries:

- Arduino IDE with ESP8266 core.

- MFRC522.h library for RFID communication.

- Firebase-ESP-Client library for ESP8266/ESP32 Firebase integration.

- (Optional) Basic web interface on the ESP for configuration (e.g., Wi-Fi credentials).

- Frontend (for viewing data):

- Firebase Console (basic viewing).

- Custom web application (HTML/CSS/JS) or mobile app (Android/iOS) to display, manage, and analyze attendance data pulled from Firebase.

📦 Project Overview & Concept:

The Smart Attendance System with RFID + Cloud Sync is a modern, automated solution designed to streamline the process of marking attendance in various environments. It replaces traditional manual registers or biometric systems with a contactless, cloud-integrated approach.

The core concept involves:

- RFID Tag/Card Enrollment: Each authorized individual (student, employee, etc.) is assigned a unique RFID tag or card. Initially, these tags' unique IDs (UIDs) need to be registered in the system, typically by linking them to a person's name or ID in the Firebase database.

- Attendance Marking Unit: This is the main hardware component, consisting of the NodeMCU/ESP8266 and the RFID RC522 reader. When an individual taps their RFID card onto the reader:

- The RC522 reads the unique ID of the RFID tag.

- The NodeMCU processes this ID.

- It then communicates with the Google Firebase database over Wi-Fi.

- It checks if the scanned UID is registered and valid.

- If valid, it records an attendance entry (e.g., the UID, the current timestamp, and perhaps the device ID or location) in the Firebase database.

- Visual/auditory feedback (on OLED/LED/Buzzer) confirms whether the attendance was successfully marked or if there was an error.

- Cloud Synchronization & Data Access: The key feature is the real-time cloud sync with Google Firebase. All attendance records are instantly uploaded and stored securely. This allows:

- Real-time Monitoring: Administrators can view attendance live from anywhere using the Firebase console or a custom web/mobile application.

- Data Management: Easy retrieval, analysis, and generation of reports (e.g., daily attendance, latecomers, absenteeism).

- Scalability: Firebase handles the database infrastructure, allowing the system to scale from a few users to thousands without complex server management.

This project merges embedded hardware with robust cloud services, making it a powerful demonstration of a connected IoT solution.
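The scan-and-check flow described above can be sketched in portable C++. This is an illustration under assumptions, not the project's code (which, as noted in the improvements list, currently accepts any UID). `uidToHex`, `Registry`, and `attendancePathFor` are hypothetical names, and the path layout follows the `/attendance_records/<UID>/<timestamp>` structure described in the setup steps. On the device, the UID bytes would come from the MFRC522 library's `uid.uidByte` and `uid.size` fields.

```cpp
#include <cstddef>
#include <cstdint>
#include <cstdio>
#include <map>
#include <string>

// Convert raw UID bytes (MIFARE UIDs are 4, 7, or 10 bytes) to an uppercase hex key.
std::string uidToHex(const std::uint8_t* bytes, std::size_t length) {
  std::string out;
  char buf[3];
  for (std::size_t i = 0; i < length; ++i) {
    std::snprintf(buf, sizeof buf, "%02X", static_cast<unsigned>(bytes[i]));
    out += buf;
  }
  return out;
}

// Hypothetical local copy of enrolled cards: UID hex -> person's name or ID.
using Registry = std::map<std::string, std::string>;

// Returns the database path to write for a registered card, or "" if the card
// is unknown (the caller would then show "Access Denied").
std::string attendancePathFor(const Registry& registry, const std::string& uid,
                              long long timestamp) {
  if (registry.find(uid) == registry.end()) return "";
  return "/attendance_records/" + uid + "/" + std::to_string(timestamp);
}
```

Keeping the UID as an uppercase hex string gives a stable key that reads the same in the Serial Monitor, in the Firebase console, and in a registration list.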

📈 Why Build It: Benefits & Impact

Building a Smart Attendance System with RFID + Cloud Sync in 2025 offers significant benefits for your skill development and career:

- High Demand in Various Sectors: Automated attendance systems are critical for efficient operations in schools, universities, corporate offices, factories, and even public transport. This project addresses a real-world, pervasive need.

- Mastering Cloud Integration for IoT: This is arguably the most crucial benefit. You'll gain practical experience in connecting an embedded device directly to a powerful cloud platform (Firebase), understanding data schemas, authentication, and real-time database operations. This skill is universally valuable in the IoT domain.

- Understanding Wireless Communication (Wi-Fi): Working with the NodeMCU/ESP8266 solidifies your understanding of Wi-Fi networking from an embedded perspective, including connection management and error handling.

- RFID Technology Proficiency: You'll learn how RFID works, how to interface with an RFID reader, and how to use unique tag IDs for identification purposes, a skill applicable to access control, inventory management, and more.

- Robust System Design: You'll learn to design a system that is reliable (handles network drops, sensor errors), secure (basic authentication for Firebase), and user-friendly (feedback mechanisms).

- Full-Stack IoT Exposure: While the core project is embedded-focused, the need for cloud integration and data visualization implicitly introduces you to backend (Firebase) and frontend (web/app for viewing) concepts, giving you a broader "full-stack IoT" perspective.

- Problem-Solving & Debugging: Troubleshooting Wi-Fi connectivity, Firebase authentication, and data integrity issues will significantly sharpen your debugging skills.

- Portfolio Differentiator: This project stands out because it's a complete, functional, and cloud-connected solution. It directly appeals to companies in enterprise IoT, smart building, education technology, and access control industries.

🏫 Use Cases:

The applications of a Smart Attendance System with RFID + Cloud Sync are extensive and varied:

- Educational Institutions (Schools, Colleges, Universities):

- Student Attendance: Automate marking student attendance in classrooms, lecture halls, or labs.

- Staff Attendance: Track faculty and administrative staff presence.

- Library Access/Book Checkout: Use RFID for quick student ID verification and book tracking.

- Corporate Offices:

- Employee Attendance: Record office entry/exit times, manage shift timings.

- Meeting Room Access: Control access to specific meeting rooms based on employee roles.

- Visitor Management: Quickly register and track visitors using temporary RFID cards.

- Factories & Industrial Settings:

- Worker Time & Attendance: Precise tracking for payroll and shift management.

- Access Control: Granting/restricting access to specific zones or machinery based on employee authorization.

- Tool/Inventory Tracking: Mark tools or components with RFID tags and track their movement in/out of storage.

- Gyms & Fitness Centers:

- Member Check-in: Automate entry for members using RFID key fobs.

- Class Attendance: Track attendance for specific fitness classes.

- Event Management:

- Attendee Tracking: Efficiently check-in attendees at conferences, workshops, or concerts.

- Zone Access: Control access to VIP areas or restricted zones.

- Public Transport:

- Automated Ticketing: Passengers tap RFID cards for quick fare deduction and entry (e.g., metro cards, bus passes).

- Small Businesses/Startups:

- An affordable and scalable solution for managing employee presence without complex infrastructure.

This project is not just a proof of concept; it's a foundation for a deployable, practical system that addresses a widespread administrative need, showcasing a strong grasp of modern IoT principles.

Project 4: Smart Attendance System with RFID + Cloud Sync Code:

🔗 View Project Code on GitHub

How to Use and Set Up:

1. Arduino IDE Setup:

- Download and install the Arduino IDE if you haven't already.

- Install the ESP8266 board package: go to File > Preferences and, in the "Additional Boards Manager URLs" field, add: https://arduino.esp8266.com/stable/package_esp8266com_index.json

- Then go to Tools > Board > Boards Manager..., search for "esp8266", and install the "esp8266 by ESP8266 Community" package.

- Go to Tools > Board > ESP8266 Boards and select your specific ESP8266 board (e.g., "NodeMCU 1.0 (ESP-12E Module)").

- Go to Tools > Port and select the serial port connected to your ESP8266.

2. Install Libraries:

- Open the Arduino IDE.

- Go to Sketch > Include Library > Manage Libraries....

- Search for and install the following libraries:

- Firebase ESP Client (by Mobizt)

- MFRC522 (listed as "by GithubCommunity" in the Library Manager)

- The ESP8266WiFi.h and SPI.h libraries are built into the ESP8266 board package.

3. Create a Firebase Project:

- Go to the Firebase Console.

- Click "Add project" and follow the steps to create a new Firebase project.

- Once your project is created, navigate to Realtime Database from the left-hand menu.

- Click "Create database." Choose a location and start in "locked mode" (you'll modify rules later).

- Get your Firebase Host: the Firebase Host is typically your project ID followed by .firebaseio.com (e.g., your-project-id-12345.firebaseio.com). Newer databases often use the form your-project-id-default-rtdb.firebaseio.com, or a regional firebasedatabase.app address, so copy the exact host shown in the Realtime Database console.

- Get your Firebase Web API Key: go to "Project settings" (gear icon next to "Project overview"), then "General," and find your "Web API Key."

4. Configure Firebase Realtime Database Rules:

- In your Firebase Realtime Database, go to the "Rules" tab.

- For initial testing, you can temporarily set the rules to allow unauthenticated reads and writes (not recommended for production, since anyone who knows the database URL could then read or modify the data):

JSON

{
  "rules": {
    ".read": true,
    ".write": true
  }
}

- Click "Publish."

5. Wiring:

- RC522 RFID Module to NodeMCU ESP8266:

- SDA (SS) to NodeMCU D4 (GPIO2)

- SCK to NodeMCU D5 (GPIO14)

- MOSI to NodeMCU D7 (GPIO13)

- MISO to NodeMCU D6 (GPIO12)

- RST to NodeMCU D3 (GPIO0)

- GND to GND

- VCC to 3.3V (the RC522 is a 3.3V device; do not power it from 5V)

- LED Indicators (Optional):

- Green LED (Success): Anode (+) to NodeMCU D1 (GPIO5) via a 220 Ohm resistor, Cathode (-) to GND.

- Red LED (Failure/Error): Anode (+) to NodeMCU D2 (GPIO4) via a 220 Ohm resistor, Cathode (-) to GND.

- Buzzer (Optional):

- Positive (+) to NodeMCU D0 (GPIO16) via a 220 Ohm resistor, Negative (-) to GND.

6. Configure Wi-Fi & Firebase Credentials in Code:

- In the Arduino IDE, open the project sketch from the GitHub repository.

- Replace "YOUR_SSID" and "YOUR_PASSWORD" with your actual Wi-Fi network credentials.

- Replace "YOUR_PROJECT_ID.firebaseio.com" with your Firebase Host URL.

- Replace "YOUR_FIREBASE_WEB_API_KEY" with your Firebase Web API Key.

7. Upload the Code:

- Click the "Verify" button (checkmark icon) to compile the code.

- Click the "Upload" button (right arrow icon) to upload the code to your NodeMCU ESP8266.

8. Monitor and Test:

- Open the Serial Monitor (Tools > Serial Monitor) with the baud rate set to 115200.

- Observe the ESP8266 connecting to Wi-Fi.

- Once connected, place an RFID card/tag on the RC522 reader.

- You should see the UID printed in the Serial Monitor and an "Attendance marked successfully on Firebase!" message if the write succeeds. The green LED will flash and the buzzer will beep.

- Check your Firebase Realtime Database in the console. You should see a new entry under /attendance_records/<your_UID>/<timestamp>/ with the status and device ID.

Next Steps and Improvements:

- User Management & Registration:

- Currently, any scanned RFID UID is accepted. Implement a system to "register" UIDs with corresponding user names (e.g., student names, employee IDs). You could store these mappings in another Firebase path (e.g., /registered_users/<UID>/name).

- Modify the code to check whether a scanned UID exists in your registered_users list before marking attendance.


- Frontend Dashboard:

- Create a simple web application (using HTML, CSS, JavaScript, and Firebase Web SDK) or a mobile application (Android/iOS) to display the attendance data in a user-friendly format. This could include:

- A list of attendees with timestamps.

- Filtering by date or UID.

- Attendance summaries (e.g., number of present/absent).

- This is where the "cloud sync" truly shines, enabling remote monitoring and powerful data analysis.


- Authentication & Security:

- Crucial for Production: Implement proper Firebase Authentication (e.g., Email/Password, Google Sign-In) to secure your database. Modify the ESP8266 code to authenticate with Firebase using user credentials or custom tokens.

- Refine Firebase Realtime Database rules to restrict access only to authenticated users and specific data paths.

- Offline Capability:

- If Wi-Fi is occasionally unavailable, implement local storage (e.g., the ESP8266's flash-emulated EEPROM, or a flash file system such as LittleFS, which supersedes the deprecated SPIFFS) to temporarily store attendance records. Once Wi-Fi is restored, upload the cached data to Firebase.

- Time Synchronization:

- While Firebase.getCurrentTimestamp() uses the server's time, for absolute accuracy and consistency with local time zones, consider adding NTP (Network Time Protocol) synchronization to the ESP8266.


- Entry/Exit Tracking:

- Modify the logic to differentiate between "check-in" and "check-out" events. This might involve tracking the last action for each UID or having separate readers for entry and exit points.

- Power Management:

- For battery-powered deployments, implement power-saving modes (e.g., deep sleep) for the ESP8266 when not actively scanning or transmitting.

- Hardware Improvements:

- Integrate a small OLED display on the NodeMCU to show "Welcome <Name>!" or "Access Denied."

- Add a real-time clock (RTC) module (like DS3231) if you need precise local timestamps even without a Wi-Fi connection.
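The offline-capability idea can start as a bounded queue: records are appended while Wi-Fi is down and uploaded oldest-first once it returns, stopping at the first failed upload so nothing is skipped or reordered. The sketch below assumes a hypothetical `upload` callback that wraps your Firebase write and returns true on success. It keeps records in RAM only, so they are lost on reset unless you also persist them to flash as suggested above.

```cpp
#include <cstddef>
#include <deque>
#include <functional>
#include <string>

// One attendance event waiting to be uploaded (fields are illustrative).
struct PendingRecord {
  std::string uid;
  long long timestamp;
};

// Bounded FIFO for records captured while Wi-Fi is down.
class OfflineQueue {
 public:
  explicit OfflineQueue(std::size_t capacity) : capacity_(capacity) {}

  // Returns false when full, so the caller can signal an error instead of
  // silently dropping the scan.
  bool enqueue(const PendingRecord& record) {
    if (pending_.size() >= capacity_) return false;
    pending_.push_back(record);
    return true;
  }

  // Upload oldest-first; stop at the first failure to preserve ordering.
  std::size_t flush(const std::function<bool(const PendingRecord&)>& upload) {
    std::size_t sent = 0;
    while (!pending_.empty() && upload(pending_.front())) {
      pending_.pop_front();
      ++sent;
    }
    return sent;
  }

  std::size_t size() const { return pending_.size(); }

 private:
  std::size_t capacity_;
  std::deque<PendingRecord> pending_;
};
```

In `loop()`, you might call `enqueue()` whenever a write fails and call `flush()` whenever `WiFi.status() == WL_CONNECTED`.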

🚀 Ready to turn your passion for hardware into real-world innovation?

At Huebits, we don’t just teach Embedded Systems — we train you to build smart, connected, real-time solutions using the tech stacks that power today’s most advanced devices.

From microcontrollers to IoT deployments, you’ll gain hands-on experience building end-to-end systems that sense, compute, and communicate — built to thrive in the field, not just on paper.

🧠 Whether you're a student, aspiring embedded engineer, or future IoT architect, our Industry-Ready Embedded Systems & IoT Engineering Program is your launchpad.

Master C, Embedded C++, MicroPython, FreeRTOS, ESP32, STM32, and cloud integration with AWS IoT — all while working on real-world projects that demand precision, problem-solving, and execution.

🎓 Next Cohort Starts Soon!

🔗 Join Now and secure your place in the IoT revolution powering tomorrow’s ₹1 trillion+ connected economy.

5. Automated Plant Irrigation System

🧠 Tech Stack:

- Microcontroller: Arduino Uno (or Arduino Nano/ESP32/ESP8266 for more compact or connected versions)

- Sensors:

- Soil Moisture Sensor: Capacitive soil moisture sensor (recommended over resistive probes such as the FC-28 for longer lifespan and better accuracy). Examples: Capacitive Soil Moisture Sensor v1.2, Adafruit STEMMA Soil Sensor.

- Optional Sensors for Advanced Systems:

- DHT11/DHT22 (Temperature & Humidity Sensor): To account for environmental evaporation rates.

- Light Sensor (LDR/BH1750): To detect day/night cycles or sufficient light for plant growth.

- Water Level Sensor: To monitor the water reservoir level.

- Actuators:

- Relay Module: 1-channel or 2-channel relay module (to switch the water pump).

- DC Water Pump: Small 5V or 12V submersible pump (e.g., mini water pump for aquariums, or a peristaltic pump for precise dosing).

- Power Supply:

- External 12V or 5V power supply for the pump (depending on pump voltage).

- USB power or a dedicated power adapter for the Arduino.

- Connectivity (Optional for Advanced Systems):

- ESP8266/ESP32 (if using these MCUs instead of Uno): For Wi-Fi connectivity to send alerts, log data, or enable remote control via a web dashboard/app.

- Plumbing:

- Small tubing/hoses

- Water reservoir (e.g., bucket, plastic container)

📦 Project Overview & Concept:

The Automated Plant Irrigation System is designed to take the guesswork and manual effort out of watering plants. It uses sensor technology to determine the real-time moisture content of the soil and activates a water pump only when necessary, so plants receive adequate water without over- or under-watering.

The core concept is a closed-loop control system:

- Soil Moisture Sensing: A soil moisture sensor is inserted into the plant's soil. This sensor continuously (or periodically) measures the electrical conductivity (resistive sensors) or capacitance (capacitive sensors) of the soil, which correlates with its moisture content. The Arduino reads the analog or digital output from this sensor.

- Threshold-Based Decision Making: The Arduino's firmware is programmed with a predefined "dryness threshold." This threshold represents the minimum acceptable moisture level for the plant.

- Automated Irrigation:

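- If the measured moisture level falls below the set threshold, indicating dry soil, the Arduino triggers the relay module. (With most capacitive sensors the raw analog value rises as the soil dries, so in code this appears as the reading rising above the dry threshold.)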

- The relay then switches on the water pump, drawing water from a reservoir and delivering it to the plant through tubing.

- The pump runs for a specific duration or until the soil moisture sensor indicates that the optimal moisture level has been reached.

- Once the desired moisture is achieved, the Arduino deactivates the relay, turning off the water pump.

- Feedback & Logging (Optional): An optional LCD or OLED screen can display the current soil moisture level, pump status, and last watering time. If using an ESP-based microcontroller, this data can be logged to a cloud platform or local web dashboard, and alerts can be sent (e.g., "Water reservoir low!").

This system is an excellent entry point into basic automation, sensor-actuator interaction, and the principles of feedback control.
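The control loop described above is a two-threshold (hysteresis) controller: the pump starts at the dry threshold and stops only at the separate wet threshold, so it does not chatter on and off around a single value. The sketch below also adds a maximum run time as a safety cutoff, which the described system does not include. That cutoff, the names, and the assumption that higher raw readings mean drier soil (true of most capacitive sensors) are choices you should adapt.

```cpp
#include <cstdint>

// Hypothetical configuration; raw values are counts from the Uno's 10-bit ADC.
struct IrrigationConfig {
  int dryThreshold;         // start the pump at or above this raw value (dry soil)
  int wetThreshold;         // stop at or below this raw value (must be < dryThreshold)
  std::uint32_t maxPumpMs;  // safety cutoff if the soil never reads "wet"
};

// Two-threshold (hysteresis) pump controller with a run-time limit.
class IrrigationController {
 public:
  explicit IrrigationController(const IrrigationConfig& cfg) : cfg_(cfg) {}

  // Call periodically with the latest reading and a millisecond clock such as
  // millis(). Returns whether the pump should be on.
  bool update(int raw, std::uint32_t nowMs) {
    if (faulted_) return false;
    if (!pumpOn_) {
      if (raw >= cfg_.dryThreshold) {
        pumpOn_ = true;
        startedMs_ = nowMs;
      }
    } else if (raw <= cfg_.wetThreshold) {
      pumpOn_ = false;  // soil is wet enough
    } else if (nowMs - startedMs_ >= cfg_.maxPumpMs) {
      // Unsigned subtraction stays correct across a millis() rollover.
      pumpOn_ = false;  // ran too long: empty reservoir or a dislodged sensor?
      faulted_ = true;  // stay off until clearFault() is called
    }
    return pumpOn_;
  }

  bool faulted() const { return faulted_; }
  void clearFault() { faulted_ = false; }

 private:
  IrrigationConfig cfg_;
  bool pumpOn_ = false;
  bool faulted_ = false;
  std::uint32_t startedMs_ = 0;
};
```

Passing Arduino's `millis()` as `nowMs` keeps working across its roughly 49.7-day rollover because the elapsed time is computed with unsigned subtraction.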

📈 Why Build It: Benefits & Impact

Building an Automated Plant Irrigation System in 2025 is highly beneficial for both personal learning and its relevance to current technological trends:

- Agriculture Meets Automation – Smart Farming: This project directly taps into the burgeoning field of Smart Agriculture (AgriTech). As global food demand rises, efficient and sustainable farming practices are crucial. Automated irrigation systems are a cornerstone of precision agriculture, reducing water waste and optimizing yields.

- Resource Conservation (Water Efficiency): The system ensures that water is used only when and where it's needed, preventing overwatering and significantly conserving water, a critical resource in many parts of the world. This aligns with environmental sustainability goals.

- Time and Labor Saving: For individual plant enthusiasts, home gardeners, or small-scale farmers, it automates a repetitive task, freeing up time and ensuring plants are cared for even when the user is away.

- Hands-on Embedded Systems Fundamentals: You'll gain practical experience in:

- Sensor Interfacing: Reading analog data from a soil moisture sensor.

- Actuator Control: Using relays to switch high-power devices (pumps) safely from a microcontroller.

- Conditional Logic & Control Loops: Implementing if-else statements and while loops for intelligent decision-making.

- Basic Calibration: Understanding how to calibrate sensor readings to real-world moisture levels.

- Power Management: Considering different power sources for the Arduino and the pump.

- Introduction to IoT (if using ESP): Upgrading to an ESP32/ESP8266 transforms this into an IoT project, teaching you about:

- Wi-Fi connectivity.

- Sending data to cloud platforms (e.g., Thingspeak, Adafruit IO).

- Remote monitoring and control via web/mobile apps.

- Setting up notifications (e.g., via email, push notifications).

- Scalability and Customization: The basic concept can be easily scaled for multiple plants/zones with more sensors and relays, or customized with additional features like weather integration, nutrient dosing, or light control.

- Problem-Solving Skills: You'll face challenges like sensor calibration, pump priming, hose leaks, and potentially managing multiple plants with different watering needs, all of which hone your problem-solving abilities.

- Portfolio Project: A functional automated irrigation system is a tangible, practical project that demonstrates a clear understanding of embedded control, sensor applications, and potentially IoT, making it attractive to employers in agriculture tech, smart home, and general automation industries.

🌿 Use Cases:

The applications of an Automated Plant Irrigation System are wide-ranging:

- Home & Hobby Gardening:

- Indoor Plants: Ensure consistent watering for houseplants, especially when on vacation.

- Small Gardens/Balcony Gardens: Automate watering for vegetable patches, herb gardens, or flower beds.

- Greenhouses: Maintain optimal moisture levels for delicate plants or seedlings.

- Small-Scale Agriculture & Urban Farming:

- Hydroponics/Aeroponics (with modifications): Controlling nutrient solution delivery based on plant needs.

- Community Gardens: Centralized irrigation for shared plots.

- Educational Purposes:

- A hands-on project for teaching basic electronics, programming, and environmental science concepts.

- Demonstrating feedback control systems in action.

- Scientific Experiments:

- Maintaining consistent soil moisture conditions for plant growth experiments.

- Studying the effects of different watering regimes on plant health.

- Proof of Concept for Larger Systems:

- Serving as a small-scale prototype for commercial smart irrigation systems in large farms, vineyards, or public parks.

- Integration into larger smart home ecosystems.

- Vertical Farms: Optimizing water delivery for plants grown in vertical stacks.

This project is not just about making a plant happy; it's about building a foundational understanding of intelligent environmental control, a skill set increasingly vital across numerous industries.

Project 5: Automated Plant Irrigation System Code:

🔗 View Project Code on GitHub

How to Use and Set Up:

1. Arduino IDE Setup:

- Download and install the Arduino IDE if you haven't already.

- Go to Tools > Board > Arduino AVR Boards and select Arduino Uno.

- Go to Tools > Port and select the serial port connected to your Arduino Uno.

2. Install Libraries:

- This project uses basic Arduino functions, so no special libraries are required beyond what's built into the IDE.

3. Wiring:

- Follow the detailed wiring guide provided in the comments within the code.

- Crucially, pay attention to the water pump's power supply. A separate power supply for the pump is highly recommended to protect your Arduino from high current draw. The relay acts as a switch for this external power.

- Ensure your relay module's IN pin logic (active HIGH or active LOW) matches the digitalWrite commands in the code. The provided code assumes an active-LOW relay (meaning LOW turns the relay ON).
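Because relay modules differ in polarity, it can help to keep the "pump on" decision separate from the pin level. The helper below is a hypothetical sketch of that mapping; on an Arduino you would use its result as `digitalWrite(RELAY_PIN, relayPinHigh(on, true) ? HIGH : LOW);`, where `RELAY_PIN` stands for whatever pin your wiring uses.

```cpp
// Map the logical request ("pump on?") to the level the relay's IN pin needs.
// Active-LOW modules energize the relay when IN is driven LOW.
constexpr bool relayPinHigh(bool pumpOn, bool activeLow) {
  return activeLow ? !pumpOn : pumpOn;
}
```

With an active-LOW module on an Uno, calling `digitalWrite(RELAY_PIN, HIGH)` before `pinMode(RELAY_PIN, OUTPUT)` avoids a brief pump pulse at startup, because a pin switched to OUTPUT otherwise starts LOW.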

4. Calibrate Soil Moisture Thresholds:

- This is the most important step for proper functionality. The SOIL_MOISTURE_DRY_THRESHOLD and SOIL_MOISTURE_WET_THRESHOLD values are specific to your sensor, soil type, and plant needs.

- Calibration Process:

- Upload the code to your Arduino without connecting the pump yet.

- Open the Serial Monitor (Tools > Serial Monitor) with the baud rate set to 9600.

- Place your soil moisture sensor in bone-dry soil. Note the Soil Moisture Value displayed in the Serial Monitor. This will be your approximate SOIL_MOISTURE_DRY_THRESHOLD.

- Place your soil moisture sensor in fully saturated (very wet) soil. Note the Soil Moisture Value. This will be your approximate SOIL_MOISTURE_WET_THRESHOLD.

- Adjust SOIL_MOISTURE_DRY_THRESHOLD (e.g., slightly below your dry reading) and SOIL_MOISTURE_WET_THRESHOLD (e.g., slightly above your wet reading) in the code. The dry threshold should always be a higher analog value than the wet threshold, as most capacitive sensors give higher readings for drier soil.

- Test by letting the soil dry out naturally and observe when the "Soil is DRY" message appears. Then add water and observe when "Soil is WET enough" appears. Refine the thresholds as needed.
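The calibration arithmetic can be written down explicitly. The sketch below, with hypothetical names, converts a raw reading into a 0 to 100 % scale between your two calibration readings and derives both thresholds by pulling each one inward from its extreme by a chosen margin, matching the "slightly below / slightly above" guidance. It assumes the dry reading is the larger number, as stated above.

```cpp
#include <algorithm>

// Raw readings noted in bone-dry and fully saturated soil (assumes dryRaw > wetRaw).
struct SoilCalibration {
  int dryRaw;
  int wetRaw;
};

struct MoistureThresholds {
  int dry;  // candidate SOIL_MOISTURE_DRY_THRESHOLD
  int wet;  // candidate SOIL_MOISTURE_WET_THRESHOLD
};

// 0 % at the dry calibration point, 100 % at the wet one, clamped to that range.
int moisturePercent(const SoilCalibration& cal, int raw) {
  const int span = cal.dryRaw - cal.wetRaw;
  const int pct = (cal.dryRaw - raw) * 100 / span;
  return std::max(0, std::min(100, pct));
}

// Move each threshold inward from its calibration extreme by marginPct of the span:
// dry slightly below the dry reading, wet slightly above the wet reading.
MoistureThresholds thresholdsFromCalibration(const SoilCalibration& cal, int marginPct) {
  const int margin = (cal.dryRaw - cal.wetRaw) * marginPct / 100;
  return MoistureThresholds{cal.dryRaw - margin, cal.wetRaw + margin};
}
```

For example, with made-up calibration readings of 620 (dry) and 280 (wet) and a 10 % margin, the thresholds come out at 586 and 314, and a reading of 450 maps to 50 %.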

5. Upload the Code:

- Copy the entire code block into your Arduino IDE.

- Click the "Verify" button (checkmark icon) to compile the code.

- Click the "Upload" button (right arrow icon) to upload the code to your Arduino Uno.

6. Test the System:

- Once uploaded and wired correctly (including the pump with its external power supply), let your plant's soil dry naturally.

- When it reaches the dry threshold, the pump should activate.

- The pump should continue running until the soil moisture sensor detects that the soil has reached the wet threshold, at which point the pump will turn off.

Next Steps and Improvements:

- IoT Integration (using ESP32/ESP8266 instead of Uno):

- Upgrade to an ESP32 or ESP8266 board. This allows you to add Wi-Fi connectivity.

- Remote Monitoring: Send soil moisture data to a cloud platform (e.g., Thingspeak, Adafruit IO, AWS IoT Core) to monitor your plant's health from anywhere.

- Remote Control: Add a web interface or mobile app to remotely activate/deactivate the pump or change irrigation thresholds.

- Alerts: Send email or push notifications if the water reservoir is low (needs a water level sensor) or if the plant hasn't been watered for too long.

- Multiple Plants/Zones:

- Extend the system to monitor and irrigate multiple plants or different zones, each with its own soil moisture sensor and dedicated pump/valve (or a multi-channel valve system).

- Water Level Monitoring:

- Add a water level sensor to your reservoir. If the water level drops too low, send an alert to refill the reservoir and/or prevent the pump from running dry.

- Advanced Scheduling:

- Incorporate a Real-Time Clock (RTC) module (like DS3231) to allow irrigation based on time-of-day schedules in addition to soil moisture.

- Add a light sensor to irrigate only during daylight hours or specific light conditions.

- Nutrient Dosing:

- For advanced hydroponics or growth systems, integrate a peristaltic pump to automatically dose liquid nutrients based on a schedule or sensor readings (e.g., pH, EC sensors).

- User Interface:

- Add a small LCD or OLED display to show current soil moisture, pump status, and last watering time directly on the device.

- Historical Data Logging:

- If not using cloud integration, add an SD card module to log sensor data locally for later analysis.

- Power Optimization:

- For battery-powered operation, implement low-power sleep modes for the microcontroller.
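The multi-zone and water-level ideas above combine naturally: each zone keeps its own thresholds and pump state, and a low-reservoir signal overrides every zone. The following C++ sketch illustrates this under stated assumptions: the `Zone` struct, the `updateZones` function, and the source of the reservoir flag (for example, a float switch) are hypothetical. Plain arrays are used because the AVR Arduino toolchain does not provide the C++ standard library containers by default.

```cpp
#include <stddef.h>

// Hypothetical per-zone state: each zone has its own sensor, thresholds,
// and pump or valve. Higher readings are assumed to mean drier soil.
struct Zone {
  int dryThreshold;  // start watering at or above this reading
  int wetThreshold;  // stop watering at or below this reading
  bool pumpOn;       // current pump/valve state for this zone
};

// Updates every zone from its latest reading. When the reservoir is low,
// all pumps are forced off so none of them can run dry.
void updateZones(Zone zones[], const int readings[], size_t count, bool reservoirLow) {
  for (size_t i = 0; i < count; ++i) {
    Zone& z = zones[i];
    if (reservoirLow) {
      z.pumpOn = false;  // interlock: never pump from an empty reservoir
    } else if (!z.pumpOn && readings[i] >= z.dryThreshold) {
      z.pumpOn = true;   // this zone is dry: start watering
    } else if (z.pumpOn && readings[i] <= z.wetThreshold) {
      z.pumpOn = false;  // this zone is wet enough: stop
    }
  }
}
```

After each call, write every zone's `pumpOn` to that zone's relay or valve pin, and send the refill alert whenever the reservoir flag is set.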

6. Voice-Controlled Robot Using Arduino & Bluetooth

🧠 Tech Stack:

- Microcontroller: Arduino Uno (or Arduino Nano/Mega for more GPIOs if needed)

- Bluetooth Module: HC-05 Bluetooth Module (Master/Slave configurable, ideal for communication with Android). An HC-06 (Slave only) could also work if the Android app is always the master.

- Motor Driver: L298N Motor Driver Module (to control DC motors, capable of driving two motors independently with speed control). Alternatively, a simpler L293D if using smaller motors.

- Motors: 2 or 4 DC Gear Motors (e.g., standard yellow TT motors)

- Chassis: 2-wheel drive or 4-wheel drive robot chassis with wheels.

- Power Supply:

- 9V battery for Arduino Uno (or external power adapter).

- Separate battery pack (e.g., 4x AA batteries or a LiPo battery) for the motors, connected to the L298N's motor supply input and able to deliver sufficient current.

- Smartphone: Android device with a compatible voice recognition app (e.g., "Arduino Bluetooth RC," "Bluetooth Voice Control," or a custom-built app using Android's speech-to-text API).

- Optional Enhancements:

- LEDs: For status indicators (e.g., connected, moving).

- Buzzer: For auditory feedback.

- Ultrasonic Sensor (HC-SR04): For obstacle avoidance, adding autonomy.

📦 Project Overview & Concept:

The Voice-Controlled Robot Using Arduino & Bluetooth is an exciting, interactive robotics project that combines embedded control with wireless communication and basic voice recognition. The core idea is to command a mobile robot's movements (forward, backward, left, right, stop) by speaking into a smartphone.

The system is split into two main parts:

1. The Control Unit (Android Smartphone):

- A dedicated Android application (either pre-built or custom-developed) is used.

- This app utilizes the smartphone's built-in voice recognition capabilities (Google Speech-to-Text).

- When the user speaks a command (e.g., "forward," "stop," "left"), the app converts the speech into a text string.

- This text command is then transmitted wirelessly from the smartphone to the robot's Bluetooth module.

2. The Robot Unit (Arduino with Bluetooth & Motors):

- The Arduino Uno acts as the robot's brain. It's connected to the HC-05 Bluetooth module and an L298N motor driver.

- The HC-05 module receives the text command from the Android app via Bluetooth.

- The Arduino reads this incoming command from the Bluetooth module's serial interface.

- Based on the received command (e.g., "forward"), the Arduino sends appropriate signals to the L298N motor driver.

- The L298N, in turn, controls the direction and speed of the DC motors, making the robot move as commanded.

- Different commands will trigger different motor actions (e.g., "stop" halts motors, "left" turns one motor off and keeps the other on, or runs them in opposite directions).
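One way to express the command-to-motor mapping is as a table of logic levels for the L298N's four direction inputs (IN1 to IN4). The sketch below assumes a 2-wheel differential-drive robot with IN1/IN2 on the left motor and IN3/IN4 on the right, and implements turns by stopping one wheel, the first of the two turning strategies mentioned above. `MotorPins` and `motorPinsFor` are illustrative names, not from the project code, and which polarity counts as "forward" depends on how your motors are wired.

```cpp
#include <string.h>

// Logic levels for the four L298N direction inputs.
// Assumes IN1/IN2 drive the left motor and IN3/IN4 drive the right motor.
struct MotorPins {
  bool in1, in2;  // left motor:  (1,0) = forward, (0,1) = backward, (0,0) = stop
  bool in3, in4;  // right motor: same convention
};

// Maps an upper-case command word to L298N input levels.
// Unknown commands stop the robot as a safe default.
MotorPins motorPinsFor(const char* command) {
  if (strcmp(command, "FORWARD") == 0)  return {true,  false, true,  false};
  if (strcmp(command, "BACKWARD") == 0) return {false, true,  false, true};
  if (strcmp(command, "LEFT") == 0)     return {false, false, true,  false};  // left wheel stops, right drives
  if (strcmp(command, "RIGHT") == 0)    return {true,  false, false, false};  // right wheel stops, left drives
  return {false, false, false, false};  // "STOP" and anything unrecognized
}
```

On the Arduino, each field would be written to its pin with `digitalWrite(pin, level ? HIGH : LOW)`, while speed is set separately by PWM on the L298N's ENA/ENB enable pins via `analogWrite()` (with the modules' ENA/ENB jumpers removed).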

This project brilliantly demonstrates the integration of mobile technology with embedded hardware to create an intuitive and responsive robotic system.

📈 Why Build It: Benefits & Impact

Building a Voice-Controlled Robot in 2025 offers a unique blend of fun, interaction, and deep technical learning:

- Fun, Interactive, and Visually Impressive: This project is inherently engaging. Seeing a robot respond to your voice commands is incredibly satisfying and makes for an excellent demonstration piece in your portfolio or at science fairs.

- Showcasing Wireless Control: You gain hands-on experience with Bluetooth communication, a fundamental technology for many IoT and mobile-connected embedded devices. Understanding pairing, data transmission, and serial communication protocols is key.

- Introduction to Robotics & Kinematics: You'll learn the basics of robot locomotion, how to control DC motors for movement, and the principles of differential drive (if using a 2-wheel robot).

- Voice Recognition Integration: While the Android app handles the complex speech-to-text, you learn how an embedded system receives and interprets text commands originating from a voice interface. This is a crucial step towards more sophisticated voice assistants and smart devices.

- Hands-on with Motor Drivers & Power Management: You'll work with motor drivers, understanding how they translate low-power microcontroller signals into high-current control for motors. You'll also learn the importance of separate power sources for the microcontroller and motors.

- Algorithmic Thinking: Developing the Arduino code requires precise control logic for different movements and careful parsing of incoming voice commands.

- Problem-Solving & Debugging: Troubleshooting Bluetooth connectivity issues, motor wiring, and command parsing will significantly enhance your debugging skills across hardware and software.

- Portfolio Differentiator: A functional voice-controlled robot is an exciting and memorable project that stands out on a resume. It demonstrates practical skills in embedded systems, robotics, wireless communication, and human-machine interaction, appealing to companies in robotics, automation, and consumer electronics.

🤖 Use Cases:

Beyond the cool factor, the principles learned from a voice-controlled robot have broader applications:

- Educational Robotics Platform:

- An excellent introductory project for students learning about robotics, programming, and electronics.

- Can be adapted for STEM workshops or school competitions.

- Assistive Technology (Conceptual Basis):

- The core concept of voice control for movement can be scaled up for assistive devices for individuals with mobility impairments (e.g., voice-controlled wheelchairs or robotic arms).

- Basic Home/Office Automation (Mobile Robots):

- A simple prototype for a robot that can retrieve items or perform basic tasks in a home or office environment based on verbal commands.

- Industrial Training & Simulation:

- Small-scale models for demonstrating robotic control principles without needing full-sized industrial robots.

- Interactive Toys & Entertainment:

- The basis for more advanced, interactive robotic toys or companions.

- Remote Control & Telepresence:

- While this project uses voice, the wireless communication framework can be extended to transmit other types of commands for remote control of devices or telepresence robots.

- Proof of Concept for Navigation Systems:

- Adding an ultrasonic sensor transforms it into a robot that can navigate and avoid obstacles while still responding to voice commands for higher-level directions.

This project is not just a toy; it's a foundational step into the fascinating world of human-robot interaction and mobile robotics.

Project 6: Voice-Controlled Robot Using Arduino & Bluetooth Codes:

🔗 View Project Code on GitHub

How to Use and Set Up:

1. Arduino IDE Setup:

- Download and install the Arduino IDE if you haven't already.

- Go to Tools > Board > Arduino AVR Boards and select Arduino Uno.

- Go to Tools > Port and select the serial port connected to your Arduino Uno.

2. Install Libraries:

- This project primarily uses the SoftwareSerial library, which is built into the Arduino IDE, so no extra installation is needed.

3. Wiring:

- Follow the detailed wiring guide provided in the comments within the code. Pay critical attention to power connections for the L298N motor driver and motors.

- Common Ground: Ensure that the GND of your Arduino, the GND of your L298N, and the negative terminal of your motor battery are all connected together. This is crucial for proper operation.

- Voltage Divider for the HC-05 RX Pin (may be optional): If your HC-05 module's RX pin is not 5V tolerant (i.e., its documentation specifies 3.3V logic input only), you must use a voltage divider on the line from the Arduino's TX (Digital Pin 11) to the HC-05's RX pin. A simple divider is a 1k Ohm resistor in series with the Arduino TX, followed by a 2k Ohm resistor from that point to GND, with the HC-05 RX connected at the junction between the two resistors. This gives 5 V × 2k / (1k + 2k) ≈ 3.3 V at the RX pin. Many HC-05 breakout boards already include this.

4. Upload the Code:

- Copy the entire code block into your Arduino IDE.

- Click the "Verify" button (checkmark icon) to compile the code.

- Click the "Upload" button (right arrow icon) to upload the code to your Arduino Uno.

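- Important: Because the HC-05 is wired to the SoftwareSerial pins (Digital Pins 10 and 11) rather than the hardware serial pins (0 and 1) used for uploading, it does not need to be disconnected during upload. If you instead wire the HC-05 to pins 0 and 1, disconnect its TXD line before uploading and reconnect it once the upload is complete.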

5. Pair with Android Phone:

- Power up your Arduino and HC-05 module. The HC-05's LED should be blinking rapidly (indicating it's in pairing mode).

- On your Android phone, go to Bluetooth settings and search for new devices.

- You should see a device named "HC-05" (or similar). Pair with it.

- The default PIN for HC-05 is usually 1234 or 0000.

- Pairing alone does not change the LED. Once an app actually connects to the module (step 6), the HC-05's LED should switch to a slow blink pattern, indicating an active connection.

6. Use an Android Voice Command App:

- Download a Bluetooth serial terminal app with voice input from the Google Play Store. Examples include "Arduino Bluetooth RC," "Bluetooth Voice Control," or simply "Bluetooth Serial Controller."

- Open the app and connect to your paired "HC-05" device.

- Look for a voice input or microphone icon within the app.

- Speak the commands: "FORWARD", "BACKWARD", "LEFT", "RIGHT", "STOP". Ensure these are spoken clearly and match the toUpperCase() versions in the Arduino code. The app will convert your voice to text and send it via Bluetooth serial.

7. Monitor Output:

- Open the Serial Monitor (Tools > Serial Monitor) on your computer with the baud rate set to 9600. This will show you the commands received by the Arduino and the actions it's taking.
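Voice apps rarely send text in exactly the form the code compares against: the transcription may be lower-case, padded with spaces, or followed by a line ending such as "\r\n". The following C++ sketch shows one way to normalize the received text and then apply simple keyword spotting, so that phrases like "go forward" or "move ahead" map to FORWARD. It assumes the whole phrase has already been read into a C string. `normalizeCommand` and `matchCommand` are hypothetical helpers; Arduino's `String` class offers comparable `trim()`, `toUpperCase()`, and `indexOf()` methods if you prefer to work with it instead.

```cpp
#include <ctype.h>
#include <stddef.h>
#include <string.h>

// Copies `raw` into `out` in upper case, dropping leading and trailing
// whitespace (e.g., the "\r\n" many Bluetooth terminal apps append).
void normalizeCommand(const char* raw, char* out, size_t outSize) {
  if (outSize == 0) return;
  while (*raw && isspace((unsigned char)*raw)) ++raw;  // skip leading whitespace
  size_t n = 0;
  while (raw[n] != '\0' && n + 1 < outSize) {
    out[n] = (char)toupper((unsigned char)raw[n]);
    ++n;
  }
  while (n > 0 && isspace((unsigned char)out[n - 1])) --n;  // trim trailing whitespace
  out[n] = '\0';
}

// Simple keyword spotting on an upper-case phrase. STOP is checked first so
// any phrase containing "stop" halts the robot. Returns nullptr if no
// keyword is found.
const char* matchCommand(const char* phrase) {
  if (strstr(phrase, "STOP")) return "STOP";
  if (strstr(phrase, "BACK")) return "BACKWARD";
  if (strstr(phrase, "FORWARD") || strstr(phrase, "AHEAD")) return "FORWARD";
  if (strstr(phrase, "LEFT")) return "LEFT";
  if (strstr(phrase, "RIGHT")) return "RIGHT";
  return nullptr;
}
```

The canonical word returned by `matchCommand` can then be passed to the existing command-comparison logic. Substring matching is crude ("RIGHT" also matches "ALRIGHT"), so it works best with a small, distinct vocabulary.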

Next Steps and Improvements:

- More Robust Voice Command Handling:

- Keyword Spotting: Implement more flexible voice parsing on the Arduino side, allowing for variations like "Go forward," "Move ahead," etc., all mapping to "FORWARD."

- Confidence Thresholds: If you build a custom Android app, the speech recognizer can return confidence scores alongside its results. Send only commands that meet a certain confidence level to avoid misinterpretations.

- Obstacle Avoidance:

- Integrate an Ultrasonic Sensor (HC-SR04) and modify the Arduino code to make the robot autonomously avoid obstacles while still responding to voice commands for general direction. The robot would check for obstacles first, then obey voice commands if the path is clear.

- Mobile Application Development:

- Develop a custom Android app (using Android Studio) for a more tailored user experience. This app could feature a custom UI, visual feedback, and more advanced voice command processing.

- Power Management: