🛠️ The Top 10 ETL Projects to Master in 2025

📊 Introduction: Why ETL Matters More Than Ever in 2025

In 2025, data is no longer regarded as a mere byproduct; it is unequivocally the currency of innovation. From real-time health monitoring systems that track vital signs within milliseconds to automated retail forecasting engines that predict consumer demand, ETL (Extract, Transform, Load) pipelines are the unseen yet indispensable backbone of every intelligent system.

Whether you're orchestrating the migration of petabytes across sprawling cloud data lakes or cleansing chaotic API responses to feed a cutting-edge machine learning model, ETL is the alchemical process that refines raw, disparate data into decision-ready intelligence. It's the critical bridge between data accumulation and actionable insight.

But here’s the crucial twist that defines the current landscape: the industry is no longer merely seeking "data enthusiasts." The demand has shifted dramatically towards builders — individuals who possess the rare blend of theoretical understanding and practical acumen to engineer pipelines that are robust, inherently scalable, and truly production-grade. Companies are looking for those who can move beyond conceptual diagrams to deploy solutions that perform reliably under immense pressure, consistently deliver high-quality data, and can evolve with the ever-changing demands of the business.

This blog isn’t merely a compendium of ideas. It is designed as your blueprint for becoming an ETL architect. We've handpicked 10 project ideas, each chosen to blend real-world complexity with immediate, hands-on relevance. These aren't academic exercises; they are tangible, impactful projects that are perfect for fortifying your portfolio, enriching your resume, or adding substantial depth to your GitHub README.

Whether you aspire to be a trailblazing data engineer, a cutting-edge machine learning practitioner, or a backend developer, these are the projects that will prove your ability to move data with intent and intelligence. They showcase not just what you know, but what you can build and deliver in a rapidly accelerating data economy.

"Let’s build, and in doing so, redefine the future of data"

Further Considerations for 2025 Context:

- Rise of Real-time & Streaming: The emphasis on "real-time" isn't just a buzzword; it's a fundamental shift. Traditional batch ETL is still relevant for certain use cases, but event-driven architectures, Kafka, Flink, and other streaming technologies are now central to modern ETL.

- Cloud-Native and Serverless: The pervasive adoption of cloud platforms (AWS, Azure, GCP) means ETL pipelines are increasingly leveraging serverless functions, managed services (e.g., AWS Glue, Azure Data Factory, GCP Dataflow), and auto-scaling capabilities. Building for cost-efficiency and elasticity in the cloud is paramount.

- Data Mesh and Data Fabric Architectures: Organizations are moving towards decentralized data ownership and consumption. ETL architects need to understand how their pipelines fit into these broader architectural paradigms, often involving data products, self-service capabilities, and robust data governance.

- AI/ML Integration: ETL is no longer just about moving data for reporting. It's about preparing and delivering data optimized for machine learning models, often involving feature engineering directly within the pipeline, and sometimes even incorporating ML-driven anomaly detection or quality checks within the ETL process itself.

- Data Governance and Observability: With increasing regulatory scrutiny and the sheer volume of data, robust data governance (metadata management, data lineage, quality checks) and observability (monitoring, alerting, logging) are non-negotiable aspects of production-grade ETL.

- Low-Code/No-Code Tools vs. Custom Code: While many commercial tools offer low-code/no-code ETL, the ability to write custom, optimized code (Python, Scala) for complex transformations, custom connectors, and performance tuning remains a highly valued skill for "builders."

- DataOps: The principles of DataOps, bringing DevOps methodologies to data, are essential. This includes automation of testing, deployment, and monitoring of ETL pipelines, fostering collaboration between data engineers, data scientists, and operations teams.

Table of Contents:

🔄 1. Real-Time Crypto Market Tracker

📌 Project Overview:

The Real-Time Crypto Market Tracker is a robust and scalable ETL (Extract, Transform, Load) pipeline engineered to continuously ingest live cryptocurrency price data from various exchanges. The raw data is transformed in real time into actionable insights, which are then visualized on a dynamic, live dashboard. The project is a practical, hands-on simulation of the infrastructure that underpins leading trading platforms and fintech dashboards. Its core objective is to provide up-to-the-second market intelligence, enabling users to react swiftly to price fluctuations and market momentum.

⚙️ Tech Used:

- Language: Python

- Rationale: Python's extensive ecosystem, rich libraries, and ease of use make it an ideal choice for data engineering, real-time data processing, and web application development (via Streamlit). Its strong community support and readability contribute to efficient development and maintenance.

- Streaming Source: WebSocket APIs (Binance, Coinbase)

- Rationale: WebSocket APIs are crucial for real-time data streaming due to their persistent, full-duplex communication channels. Unlike traditional HTTP requests, WebSockets maintain an open connection, allowing immediate push notifications of price changes, volume updates, and other market events without constant polling. This ensures minimal latency and high data freshness.

- Database: PostgreSQL

- Rationale: PostgreSQL is chosen for its reliability as a relational database and its indexing options for time-ordered data (e.g., BRIN indexes, which stay small on large, append-only tables ordered by timestamp). It provides ACID compliance, ensuring data integrity for financial information. Its ability to handle large volumes of data and complex queries makes it suitable for storing historical price snapshots and calculated metrics.

- Visualization: Streamlit

- Rationale: Streamlit allows for rapid development of interactive web applications and dashboards purely in Python, eliminating the need for front-end development expertise (HTML, CSS, JavaScript). It's ideal for quickly prototyping and deploying data applications, making it perfect for a live, real-time market tracker where immediate visual feedback is paramount.

Libraries:

- Pandas: Essential for data manipulation and analysis, particularly for handling time-series data, cleaning, aggregation, and preparing data for visualization.

- SQLAlchemy: Provides an Object-Relational Mapper (ORM) that simplifies interaction with the PostgreSQL database, allowing developers to work with Python objects instead of raw SQL queries, improving code readability and maintainability.

- websockets: An asyncio-based library for building WebSocket clients and servers in Python, enabling efficient and reliable communication with cryptocurrency exchange APIs.

- datetime: Python's built-in module for handling dates and times, crucial for timestamping data, calculating time-based metrics (e.g., rolling averages), and managing data retention policies.
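To make the Extract step concrete, here is a minimal sketch of a WebSocket consumer built on the websockets library's `connect()` async context manager. It assumes Binance's public trade stream; the URL and the payload keys `"s"`, `"p"`, `"q"`, and `"T"` are assumptions to verify against the exchange's current documentation. Parsing is kept separate from network I/O so the transform logic can be tested without a live connection.

```python
import asyncio
import json
from datetime import datetime, timezone

# Assumed Binance raw-trade stream endpoint; check the exchange docs before use.
BINANCE_TRADE_URL = "wss://stream.binance.com:9443/ws/btcusdt@trade"


def parse_trade_message(raw: str) -> dict:
    """Flatten one trade-stream JSON message into a row ready for loading.

    Assumed payload keys: "s" (symbol), "p" (price as a string),
    "q" (quantity as a string), "T" (trade time in epoch milliseconds).
    """
    msg = json.loads(raw)
    return {
        "symbol": msg["s"],
        "price": float(msg["p"]),
        "quantity": float(msg["q"]),
        # Convert epoch milliseconds to a timezone-aware UTC datetime.
        "traded_at": datetime.fromtimestamp(msg["T"] / 1000, tz=timezone.utc),
    }


async def consume_trades(url: str = BINANCE_TRADE_URL, max_messages: int = 10) -> list[dict]:
    """Open the stream and collect a handful of parsed trades."""
    # Imported lazily so parse_trade_message works without the dependency installed.
    import websockets

    rows: list[dict] = []
    async with websockets.connect(url) as ws:
        async for raw in ws:
            rows.append(parse_trade_message(raw))
            if len(rows) >= max_messages:
                break  # leaving the context manager closes the connection
    return rows


if __name__ == "__main__":
    for row in asyncio.run(consume_trades()):
        print(row)
```

In the full pipeline, each parsed row would be buffered and written to PostgreSQL rather than printed.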

💼 Use Cases:

- Crypto Trading Dashboards: The core application, offering retail and institutional traders a live view of market movements, empowering informed decision-making.

- Real-Time Financial Alerts: Triggers for price thresholds, significant volume changes, or sudden market shifts, delivered via email, SMS, or in-app notifications.

- AI-Based Trading Bot Triggers: The real-time data stream and calculated indicators can directly feed into automated trading algorithms, enabling quick execution of buy/sell orders based on predefined strategies.

- Market Volatility Analysis: Provides the foundational data to analyze and visualize market volatility, helping traders and analysts understand risk and potential price swings.

- Educational Dashboards for Financial Literacy Platforms: Offers a practical, interactive tool for students and learners to observe real-world market dynamics without financial risk, fostering a deeper understanding of financial concepts.

- Algorithmic Strategy Backtesting: The stored historical data in PostgreSQL can be leveraged to backtest various trading strategies, optimizing parameters before live deployment.

- Regulatory Compliance & Auditing: The timestamped data can serve as a historical record of market activity, aiding in compliance reporting and auditing processes.

📈 Impact / ROI:

- Delivers Instant Insights into Crypto Price Swings: By processing and visualizing data in real-time, users gain immediate awareness of market shifts, allowing for proactive responses. This direct impact on decision-making can lead to improved trading outcomes.

- Enables Latency-Aware Trading Models: Low-latency data delivery matters for algorithmic strategies, where stale prices lead to poor decisions. This project provides a foundation for developing and testing such models, although true high-frequency trading (HFT), where microseconds matter, demands a far more specialized stack than a Python prototype.

- Boosts User Engagement on Finance Platforms Through Real-Time Metrics: Interactive dashboards featuring live data can substantially enhance user experience, which tends to increase time spent on the platform and improve retention.

- Can be Monetized as a Backend for Fintech Dashboards or Crypto Tools: The robust ETL pipeline can be offered as an API service to other financial applications, generating revenue through data subscriptions or licensing agreements.

- Reduces Information Asymmetry: By providing accessible real-time data, the project helps democratize market information, empowering a broader audience.

- Accelerates Data-Driven Decision Making: From individual traders to institutional analysts, the immediate availability of insights fosters a culture of data-informed choices.

🌐 Real-World Example:

This project is a functional, simplified replica of the underlying data infrastructure and display mechanisms employed by prominent financial data aggregators and trading platforms. It mirrors the core functionality of tools like:

- CoinMarketCap: Known for its comprehensive listing of cryptocurrencies, live price tracking, market capitalization, and historical data.

- TradingView: A popular charting platform that provides real-time market data, advanced technical analysis tools, and social networking for traders.

- CryptoCompare: Offers cryptocurrency data, news, and guides, including live prices, exchange information, and portfolio tracking.

The Real-Time Crypto Market Tracker demonstrates how these platforms acquire, process, and present live updates on market trends, trading volume movements, and various time-based analytics to millions of global users daily, forming the backbone of their real-time informational services.

🚀 Results:

- ✅ Streamlit dashboard shows real-time prices and rolling averages: The intuitive dashboard provides a clear, continuously updated view of current cryptocurrency prices (e.g., BTC/USD, ETH/USD) alongside calculated rolling averages (e.g., 5-minute, 15-minute simple moving averages). This allows users to quickly identify short-term trends and potential support/resistance levels.

- ✅ PostgreSQL stores time-series snapshots every 5 seconds: The database acts as a persistent historical record, capturing price data and potentially other metrics (like volume) at regular intervals. This time-series data is invaluable for retrospective analysis, backtesting strategies, and generating longer-term trends.

- ✅ Momentum indicators flag short-term buy/sell signals: Beyond raw prices, the pipeline calculates common momentum indicators (e.g., Relative Strength Index (RSI), Moving Average Convergence Divergence (MACD)). These indicators are displayed on the dashboard, providing early signals of potential short-term reversals or continuations to inform buy/sell decisions.

- ✅ Data can be fed into predictive models for advanced trading insights: The clean, structured, and real-time data stream is directly usable as input for machine learning models (e.g., LSTM networks for price prediction, classification models for trend forecasting). This opens avenues for developing sophisticated AI-driven trading strategies that go beyond traditional technical analysis.
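Rolling averages and RSI only need a window of recent prices. The sketch below uses plain Python for clarity (in the actual pipeline a pandas `Series.rolling(window).mean()` call would do the SMA part). It computes RSI from simple averages of gains and losses, a common variant that differs slightly from Wilder's exponentially smoothed original; the 30/70 thresholds are the usual convention, not values the project specifies.

```python
from collections import deque
from typing import Optional


def rolling_sma(prices: list[float], window: int) -> list[Optional[float]]:
    """Simple moving average over the last `window` prices (None until the window fills)."""
    buf: deque = deque(maxlen=window)
    out: list[Optional[float]] = []
    for p in prices:
        buf.append(p)  # deque drops the oldest price automatically
        out.append(sum(buf) / window if len(buf) == window else None)
    return out


def rsi(prices: list[float], period: int = 14) -> Optional[float]:
    """RSI from the last `period` price changes, using simple averages."""
    if len(prices) < period + 1:
        return None  # not enough history yet
    recent = prices[-(period + 1):]
    changes = [b - a for a, b in zip(recent, recent[1:])]
    avg_gain = sum(c for c in changes if c > 0) / period
    avg_loss = sum(-c for c in changes if c < 0) / period
    if avg_gain == 0 and avg_loss == 0:
        return 50.0  # flat prices: neither overbought nor oversold
    if avg_loss == 0:
        return 100.0  # only gains in the window
    rs = avg_gain / avg_loss
    return 100.0 - 100.0 / (1.0 + rs)


def momentum_signal(value: Optional[float], low: float = 30.0, high: float = 70.0) -> str:
    """Map an RSI value to a coarse dashboard label."""
    if value is None:
        return "warming up"
    if value >= high:
        return "overbought"
    if value <= low:
        return "oversold"
    return "neutral"


if __name__ == "__main__":
    prices = [100, 101, 102, 101, 103, 104, 103, 105]
    print(rolling_sma(prices, 3))
    value = rsi(prices, period=5)
    print(value, momentum_signal(value))
```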

This project isn’t just about crypto — it’s about mastering real-time ETL, event-driven systems, and building production-grade dashboards. It emphasizes the practical application of data engineering principles in a high-stakes, dynamic environment, showcasing proficiency in handling streaming data, optimizing database interactions, and designing user-friendly interfaces for complex information. It's a testament to building resilient and performant data pipelines that are applicable across various industries requiring real-time insights.

Project 1: Real-Time Crypto Market Tracker Code:

🔗 View Project Code on GitHub

How to Run the Project:

- Install Dependencies: Open your terminal or command prompt and run:

pip install websockets pandas sqlalchemy psycopg2-binary streamlit

- websockets: For connecting to WebSocket APIs.

- pandas: For data manipulation and time-series analysis.

- sqlalchemy: For interacting with the PostgreSQL database.

- psycopg2-binary: The PostgreSQL adapter for Python.

- streamlit: For building the interactive web dashboard.

- Set up PostgreSQL:

- Ensure you have a PostgreSQL server running.

- Create a new database (e.g., crypto_tracker_db).

- Create a user with appropriate permissions if you don't want to use the default postgres user.

- Crucially, update the DATABASE_URL variable in the app.py script with your actual PostgreSQL connection details (username, password, host, port, database name).

- Run the Streamlit Application: Save the project code from GitHub as app.py (or any other .py file) and then run it from your terminal:

streamlit run app.py

This will open a new tab in your web browser with the Streamlit dashboard.

Next Steps & Improvements:

- Error Handling and Robustness: Implement more comprehensive error handling, especially for network disconnections and database failures.

- More Indicators: Extend the indicator set beyond rolling averages, adding RSI and MACD if your version doesn't compute them yet, plus Bollinger Bands. These need longer price histories, which you're already storing in PostgreSQL.

- Charting: Integrate a charting library (e.g., Plotly, Altair) within Streamlit to visualize price trends over time.

- User Interface Enhancements: Improve the dashboard's aesthetics and add more interactive elements (e.g., dropdowns to select different cryptocurrencies, timeframes for rolling averages).

- Scalability: For a truly production-grade system, consider:

- Message Queues: Using Apache Kafka or RabbitMQ to decouple the data ingestion, transformation, and loading components.

- Separate Services: Breaking down app.py into distinct microservices for each ETL stage.

- Cloud Deployment: Deploying the application on cloud platforms (AWS, GCP, Azure) using services like EC2/Cloud Run for compute, RDS/Cloud SQL for databases, and managed message queues.

- Authentication/Authorization: If this were a multi-user application, you'd need to add user authentication and authorization mechanisms.

- Data Retention Policy: Implement a policy to purge old data from PostgreSQL to manage storage size.

- Configuration Management: Externalize configurations (like DATABASE_URL, CRYPTO_SYMBOLS) into a separate config file or environment variables.

This project provides a strong foundation for understanding real-time data pipelines and building dynamic dashboards!

🚀 Ready to turn raw data into real-world intelligence and career-defining impact?

At Huebits, we don’t just teach Data Science — we train you to build end-to-end solutions that power predictions, automate decisions, and drive business outcomes.

From fraud detection to personalized recommendations, you'll gain hands-on experience working with messy datasets, training ML models, and deploying full-stack data systems — where real-world complexity meets production-grade precision.

🧠 Whether you're a student, aspiring data scientist, or career shifter, our Industry-Ready Data Science Engineering Program is your launchpad.

Master Python, Pandas, Scikit-learn, TensorFlow, Power BI, SQL, and cloud deployment — while building job-grade ML projects that solve real business problems.

🎓 Next Cohort Launching Soon!

🔗 Join Now and become part of the Data Science movement shaping the future of business, finance, healthcare, marketing, and AI-driven industries across the ₹1.5 trillion+ data economy.

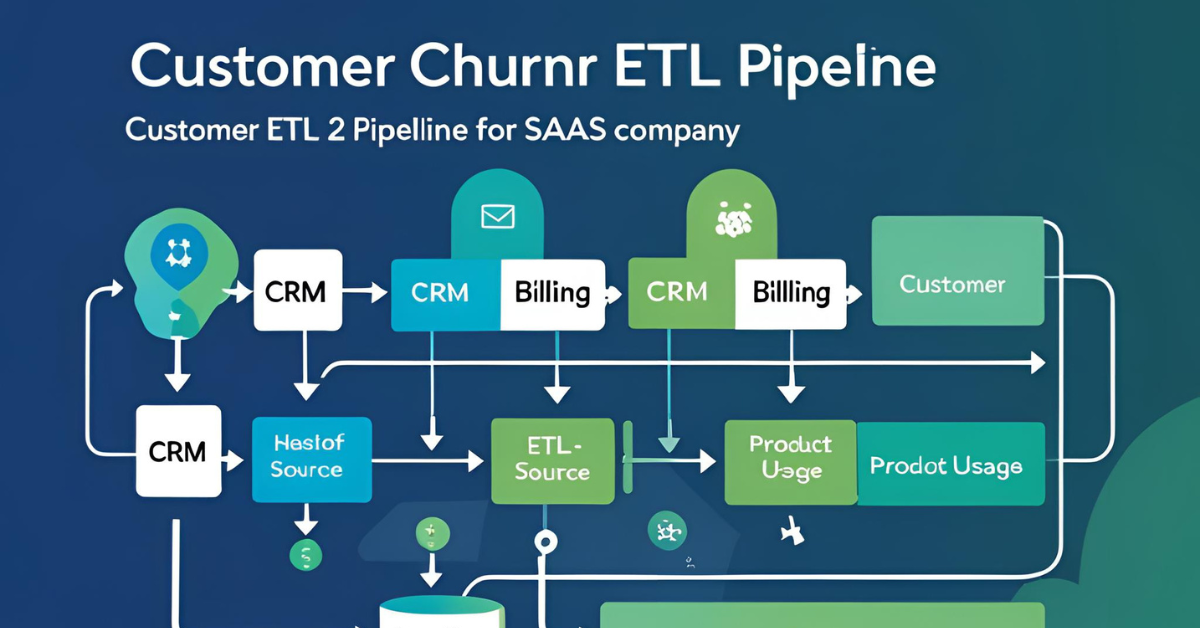

🛍️ 2. Customer Churn ETL Pipeline for SaaS

🔍 Project Overview:

This ETL pipeline is engineered to uncover and analyze churn patterns within SaaS (Software as a Service) user bases. It works in three stages. First, it tracks and extracts diverse product usage events (e.g., logins, feature clicks, session durations, subscription changes). Next, it transforms this raw, often voluminous, behavioral data into aggregated metrics such as login frequency, feature adoption rates, time spent in-app, last active timestamps, and more complex behavioral sequences. Finally, these refined insights are loaded into a dedicated analytics database that feeds a dynamic reporting dashboard. This dashboard empowers product, marketing, and sales teams not only to visualize current user health but, more critically, to predict and proactively prevent customer drop-off. By moving beyond vanity metrics, the pipeline delivers deep retention intelligence, a clear strategic advantage in the highly competitive SaaS landscape.

⚙️ Tech Used:

- Programming Language: Python

- Rationale: Python's versatility, extensive data manipulation libraries (Pandas), and its suitability for scripting ETL logic make it the ideal choice. It offers excellent connectivity to various data sources and destinations, and its readability promotes maintainability.

- Workflow Orchestration: Apache Airflow

- Rationale: Airflow is well suited to orchestrating complex, scheduled ETL workflows. It defines data pipelines as Directed Acyclic Graphs (DAGs), ensuring tasks run in the correct order and handling dependencies, retries, and monitoring. This brings reliability and automation to the daily extraction and transformation processes. It also provides a UI for monitoring pipeline health and execution history.

- Database: MySQL

- Rationale: MySQL is chosen as a reliable and widely used relational database. Its robustness makes it suitable for storing structured, aggregated metrics and historical snapshots of user behavior. It's well-supported by BI tools and provides efficient querying capabilities for analytical purposes.

- Analytics Layer: Metabase or Power BI

- Rationale: These tools provide intuitive, powerful platforms for data visualization and business intelligence. They easily connect to MySQL, allowing non-technical stakeholders to create interactive dashboards, generate reports, and drill down into customer behavior patterns without needing to write SQL queries. Metabase offers a strong open-source option, while Power BI is a robust enterprise-grade solution.

Libraries:

- Pandas: The cornerstone for in-memory data manipulation, cleaning, aggregation, and feature engineering from raw event logs. Essential for transforming granular events into meaningful KPIs.

- SQLAlchemy: An ORM (Object-Relational Mapper) that simplifies interaction with MySQL, allowing Python code to abstract away raw SQL, making database operations more Pythonic and less error-prone.

- Airflow DAGs: The specific implementation of Airflow workflows, defining the sequence of tasks (Extract, Transform, Load) and their dependencies, scheduling, and error handling.
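The Transform step, turning granular events into per-user KPIs, can be sketched in plain Python (in the project this would typically be a pandas `groupby`). The sketch assumes each event is a dict with `user_id`, `event_type`, a datetime `ts`, and, for feature clicks, a `feature` name; the event types `"login"` and `"feature_click"`, the 30-day window, and the 14-day inactivity threshold are hypothetical defaults.

```python
from collections import defaultdict
from datetime import datetime, timedelta


def events_to_kpis(events, as_of: datetime, window_days: int = 30,
                   inactive_after_days: int = 14) -> dict:
    """Aggregate raw product events into per-user engagement KPIs."""
    window_start = as_of - timedelta(days=window_days)
    acc = defaultdict(lambda: {"logins_in_window": 0, "features_used": set(), "last_active": None})

    for e in events:
        user = acc[e["user_id"]]
        ts = e["ts"]
        # Last activity counts regardless of the window.
        if user["last_active"] is None or ts > user["last_active"]:
            user["last_active"] = ts
        if ts < window_start:
            continue  # only recent events feed the windowed metrics
        if e["event_type"] == "login":
            user["logins_in_window"] += 1
        elif e["event_type"] == "feature_click":
            user["features_used"].add(e["feature"])

    kpis = {}
    for user_id, a in acc.items():
        days_inactive = (as_of - a["last_active"]).days
        kpis[user_id] = {
            "logins_in_window": a["logins_in_window"],
            "distinct_features": len(a["features_used"]),
            "days_since_last_active": days_inactive,
            "at_risk": days_inactive >= inactive_after_days,
        }
    return kpis
```

The resulting dict maps directly onto rows for the MySQL KPI table that the BI layer reads.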

💼 Use Cases:

- Early Churn Prediction based on User Inactivity: Automatically flags users who show a significant decrease in login frequency, feature engagement, or a complete cessation of activity after a predefined period, enabling proactive intervention.

- Behavior Segmentation (e.g., High-Engaged vs. Drop-off Users): Classifies users into distinct groups based on their usage patterns (e.g., power users, casual users, at-risk users, dormant users), allowing for tailored marketing and product strategies.

- Trigger Automated Email Campaigns for “At-Risk” Customers: Integrates with CRM or marketing automation platforms to send personalized re-engagement emails (e.g., "We miss you!", "Check out our new feature!") to users identified as likely to churn.

- Weekly Product Team Insights on Feature Adoption: Provides regular reports on which features are most used, least used, or are seeing declining engagement, guiding product development and improvement cycles.

- Investor Dashboards for User Retention Rates: Offers high-level, yet accurate, metrics on customer retention, LTV (Lifetime Value), and churn rates, crucial for investor relations and demonstrating business health.

- A/B Testing Impact Analysis: Allows for the measurement of how new features or changes impact user engagement and retention by comparing cohorts.

- Customer Health Scoring: Develops a comprehensive "health score" for each user based on a combination of usage metrics, support interactions, and subscription status, providing a single metric to prioritize outreach.
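The customer health score and behavior segments above can be sketched as a weighted blend of usage KPIs. Every weight, cap, and segment cutoff below is a hypothetical starting point; in practice you would tune them against your own churn history or replace them with a trained model.

```python
def health_score(logins_in_window: int, distinct_features: int,
                 days_since_last_active: int, open_tickets: int = 0) -> float:
    """Blend usage signals into a 0-100 score (all weights and caps are assumptions)."""
    login_part = min(logins_in_window / 20, 1.0)               # 20+ logins earns full credit
    feature_part = min(distinct_features / 5, 1.0)             # 5+ features earns full credit
    recency_part = max(0.0, 1 - days_since_last_active / 30)   # decays to 0 after 30 idle days
    support_penalty = min(open_tickets * 0.05, 0.2)            # 5 points per open ticket, max 20
    score = 100 * (0.4 * login_part + 0.3 * feature_part + 0.3 * recency_part - support_penalty)
    return round(max(0.0, min(score, 100.0)), 1)


def segment(score: float) -> str:
    """Bucket a health score into the segments used for outreach."""
    if score >= 70:
        return "power user"
    if score >= 40:
        return "casual"
    if score >= 15:
        return "at risk"
    return "dormant"


if __name__ == "__main__":
    s = health_score(logins_in_window=10, distinct_features=2, days_since_last_active=15)
    print(s, segment(s))
```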

📈 Impact / ROI:

- 🔁 Increases User Retention by Identifying Issues Early: The most direct impact. By flagging at-risk customers, businesses can intervene with targeted support, education, or incentives, significantly increasing the likelihood of retention.

- 💸 Saves Revenue by Proactively Reducing Churn: Retaining an existing customer is almost always more cost-effective than acquiring a new one. This pipeline directly contributes to revenue stability and growth by minimizing lost subscriptions.

- 📊 Empowers Marketing/Sales with Real Customer Behavior Metrics: Shifts focus from anecdotal evidence to concrete, data-driven insights, enabling highly targeted and effective campaigns and sales outreach.

- ⏳ Reduces Guesswork by Backing Decisions with Usage Analytics: Eliminates reliance on intuition by providing verifiable data on what drives engagement and what leads to churn, optimizing product development, marketing spend, and customer support efforts.

- 📉 Cuts Support Costs by Spotting Friction Points in UX: By analyzing user behavior leading up to churn, teams can identify specific areas in the product's User Experience (UX) that cause frustration or abandonment, leading to targeted improvements that reduce support tickets and improve satisfaction.

- 🚀 Enhances Product-Market Fit: Continuous feedback loops from churn analysis can guide product iterations to better meet customer needs, strengthening product-market fit.

🌐 Real-World Example:

This pipeline directly mimics the sophisticated internal analytics and data infrastructures employed by leading SaaS giants to maintain their massive user bases and drive continuous growth. Companies like:

- Slack: Continuously tracks workspace activity, message frequency, app integrations used, and channel participation to identify declining engagement and trigger proactive support or feature recommendations.

- Zoom: Monitors meeting frequency, duration, feature usage (e.g., screen sharing, breakout rooms), and account activity to understand user stickiness and identify signs of potential churn in enterprise accounts.

- HubSpot: Deeply analyzes CRM usage, marketing automation adoption, sales pipeline progression, and content consumption within its platform to manage customer health scores and inform their customer success outreach.

This project simulates that entire backend data flow, from the raw event logs generated by user interactions to the insightful dashboards that guide strategic business decisions. It demonstrates the ability to build a comprehensive system for customer lifecycle management through data.

🚀 Results:

- ✅ Extracts user login/events data daily: A robust extraction mechanism pulls raw behavioral data from various sources (e.g., application logs, analytics APIs) on a scheduled basis, ensuring fresh data for analysis.

- ✅ Transforms activity logs into KPIs like retention rate, time-to-drop-off: The transformation logic aggregates raw events into meaningful business metrics. This includes calculating rolling retention rates (e.g., N-day retention), identifying the duration between key user actions, and defining inactivity thresholds for "time-to-drop-off."

- ✅ Loads clean metrics into MySQL for BI tools to visualize: The processed and enriched data is efficiently loaded into the analytical database, optimized for fast querying by BI tools. This structured data makes it easy to build dashboards showing trends, cohorts, and specific user segments.

- ✅ Triggers insights like “Which features lead to highest retention?”: By correlating feature usage with long-term retention, the pipeline provides empirical evidence of which product functionalities are "sticky," informing future product development and marketing messages. This often involves creating custom analytical views or pre-aggregating data for specific insights.

- ✅ Becomes the foundation for ML churn prediction models: The clean, feature-engineered dataset generated by this ETL pipeline is a natural training set for machine learning models (e.g., logistic regression, random forests, deep learning) that estimate each user's likelihood of churning. This sets the stage for a fully predictive and prescriptive customer retention strategy.
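N-day retention has several definitions. This sketch uses classic day-N retention: the share of a signup cohort that is active exactly N days after signing up. It assumes activity has already been reduced to per-user sets of active dates, and the optional `as_of` parameter (a hypothetical addition) skips cohorts whose day N hasn't happened yet.

```python
from collections import defaultdict
from datetime import date, timedelta
from typing import Optional


def n_day_retention(signups: dict, activity: dict, n: int,
                    as_of: Optional[date] = None) -> dict:
    """Classic day-N retention per signup cohort.

    signups:  user_id -> signup date
    activity: user_id -> set of dates on which the user was active
    Returns cohort date -> fraction of that cohort active exactly n days later.
    """
    cohorts = defaultdict(list)
    for user_id, signup in signups.items():
        cohorts[signup].append(user_id)

    result = {}
    for cohort_date, users in sorted(cohorts.items()):
        target = cohort_date + timedelta(days=n)
        if as_of is not None and target > as_of:
            continue  # day N is in the future; the cohort can't be measured yet
        retained = sum(1 for u in users if target in activity.get(u, set()))
        result[cohort_date] = retained / len(users)
    return result
```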

Project 2: Customer Churn ETL Pipeline for SaaS Code:

🔗 View Project Code on GitHub

How to Run the Project:

- Install Dependencies: Open your terminal or command prompt and run:

pip install pandas sqlalchemy

# If you plan to switch to MySQL later, also install:

# pip install mysql-connector-python

- Database Setup:

- For SQLite (default in the code): No special setup is needed. The database file saas_churn_analytics.db will be created automatically in the same directory where you run the script.

- For MySQL:

- Ensure you have a MySQL server running.

- Create a new database (e.g., saas_churn_db).

- Create a user with appropriate permissions.

- Crucially, update the DATABASE_URL variable in the app.py script to point to your MySQL database (e.g., DATABASE_URL = "mysql+mysqlconnector://your_user:your_password@localhost:3306/saas_churn_db").

- Run the ETL Pipeline: Save the project code from GitHub as app.py (or any other .py file) and then run it from your terminal:

python app.py

You will see print statements indicating the extraction, transformation, and loading steps, along with a sample of the data loaded into the database.

Next Steps & Improvements:

- Real Data Sources: Replace the extract_user_activity_data function with actual connectors to your SaaS application's event logs, analytics platforms (e.g., Mixpanel, Segment), or internal databases.

- Apache Airflow Integration: To truly implement the workflow orchestration, you would:

- Break down the extract_user_activity_data, transform_activity_to_kpis, and load_kpis_to_database functions into separate Python files or modules.

- Create an Airflow DAG file that defines these functions as PythonOperator tasks, sets their dependencies, and schedules their daily execution.

- Advanced Churn Prediction:

- Feature Engineering: Develop more sophisticated features from the raw data (e.g., recency, frequency, monetary value - RFM, velocity of usage, specific feature adoption funnels).

- Machine Learning Models: Train and integrate machine learning models (e.g., Logistic Regression, Random Forest, Gradient Boosting, LSTM for sequential data) to predict churn likelihood.

- Model Deployment: Implement a mechanism to deploy and serve these models for real-time or batch predictions.

- Dashboarding (Metabase/Power BI): Connect Metabase or Power BI to your MySQL (or SQLite) database. You can then build interactive dashboards to visualize:

- Overall churn rate trends.

- Retention curves by cohort.

- Feature adoption rates over time.

- Health scores for individual users.

- Segments of at-risk users.

- Alerting and Actioning: Based on the churn predictions or health scores, set up automated alerts (e.g., email, Slack notifications) for customer success teams to proactively engage with at-risk customers.

- Data Quality Checks: Add data validation steps within the transformation phase to ensure data integrity.

- Error Handling and Logging: Implement robust error handling and detailed logging for monitoring the pipeline's health.
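Data quality checks can sit between extraction and transformation. A minimal sketch, assuming the same event dicts as the pipeline (`user_id`, `event_type`, datetime `ts`); the event vocabulary in KNOWN_EVENT_TYPES and the 5% reject threshold are hypothetical and should be replaced with your product's real values.

```python
from datetime import datetime

# Hypothetical event vocabulary; replace with your product's real event types.
KNOWN_EVENT_TYPES = {"login", "feature_click", "session_end", "subscription_change"}


def validate_events(events: list[dict], now: datetime) -> tuple[list[dict], list[tuple[int, str]]]:
    """Split events into valid rows and (index, reason) rejects before transforming."""
    valid: list[dict] = []
    rejects: list[tuple[int, str]] = []
    seen = set()
    for i, e in enumerate(events):
        ts = e.get("ts")
        if not e.get("user_id"):
            rejects.append((i, "missing user_id"))
        elif e.get("event_type") not in KNOWN_EVENT_TYPES:
            rejects.append((i, f"unknown event_type: {e.get('event_type')!r}"))
        elif not isinstance(ts, datetime):
            rejects.append((i, "missing or non-datetime ts"))
        elif ts > now:
            rejects.append((i, "timestamp in the future"))
        elif (e["user_id"], e["event_type"], ts) in seen:
            rejects.append((i, "duplicate event"))
        else:
            seen.add((e["user_id"], e["event_type"], ts))
            valid.append(e)
    return valid, rejects


def check_reject_rate(total: int, rejected: int, max_rate: float = 0.05) -> None:
    """Fail the run loudly if too many rows were rejected."""
    if total and rejected / total > max_rate:
        raise ValueError(f"{rejected}/{total} events rejected; exceeds {max_rate:.0%} threshold")
```

Raising on a high reject rate makes the failure visible in Airflow (the task goes red) instead of silently loading a partial day.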

This simulated ETL pipeline provides a solid starting point for building a comprehensive customer churn analysis system for a SaaS product.

🚗 3. Smart Transport Demand Prediction System

🔍 Project Overview:

This ETL project focuses on building a pipeline that predicts peak demand zones for public or private transport services from historical trip data. By analyzing geographic locations (pickup and drop-off points), timestamps (hour of day, day of week, seasonal variations), and usage trends (number of trips, vehicle type, passenger count), the system generates demand forecasts. The ultimate goal is to help fleet operators, whether they manage taxi fleets, public buses, delivery vehicles, or scooter-sharing services, deploy their resources optimally: positioning vehicles before demand surges, minimizing customer wait times, and maximizing operational efficiency. Essentially, this project functions as the intelligent brain behind modern urban mobility, transforming raw data into strategic operational advantages.
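Before any model runs, the pipeline has to turn raw pickups into demand counts per zone and time slot. A minimal sketch, assuming trips arrive as `(pickup_time, latitude, longitude)` tuples and using a simple square latitude/longitude grid as the zone definition (a hypothetical simplification; a production version would more likely spatially join points to real zone polygons with Geopandas).

```python
import math
from collections import Counter
from datetime import datetime


def zone_for(lat: float, lon: float, cell_deg: float = 0.01) -> tuple[int, int]:
    """Map a GPS point to a grid cell; 0.01 degrees is roughly 1 km at mid latitudes."""
    return (math.floor(lat / cell_deg), math.floor(lon / cell_deg))


def demand_by_zone_hour(trips, cell_deg: float = 0.01) -> Counter:
    """Count pickups per (zone, weekday, hour); weekday 0 is Monday."""
    counts: Counter = Counter()
    for pickup_time, lat, lon in trips:
        key = (zone_for(lat, lon, cell_deg), pickup_time.weekday(), pickup_time.hour)
        counts[key] += 1
    return counts


if __name__ == "__main__":
    trips = [
        (datetime(2025, 3, 3, 8, 15), 40.7128, -74.0060),
        (datetime(2025, 3, 3, 8, 40), 40.7130, -74.0055),
        (datetime(2025, 3, 3, 18, 5), 40.7306, -73.9352),
    ]
    # The busiest (zone, weekday, hour) slots are the candidate peak demand zones.
    print(demand_by_zone_hour(trips).most_common(2))
```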

⚙️ Tech Used:

- Programming Language: Python

- Rationale: Python's rich ecosystem of data science and geospatial libraries makes it the primary language. Its ease of use for data manipulation, statistical analysis, and machine learning model development is crucial for this project's analytical core.

Libraries:

- Pandas: Indispensable for data cleaning, transformation, aggregation, and handling time-series data from trip logs. It will be used to structure and preprocess the raw input.

- Geopandas: Critical for handling spatial data (GPS coordinates). It extends Pandas to allow spatial operations on geographic data types, enabling the creation of geographic zones, spatial joins, and visualizations of demand heatmaps.

- NumPy: Provides efficient numerical operations, fundamental for array manipulation and calculations required for statistical analysis and machine learning algorithms.

- Scikit-learn: The go-to library for machine learning in Python. It offers a wide range of algorithms for regression and classification tasks, which will be used to build and evaluate predictive models for transport demand.

- Database: SQLite (or PostgreSQL for advanced scale)

- Rationale:

- SQLite: Excellent for prototyping and smaller-scale projects due to its file-based nature and ease of setup. It's suitable for storing processed, aggregated historical demand data.

- PostgreSQL: Recommended for production-grade systems handling large volumes of historical and real-time data. Its robust support for geospatial data (PostGIS extension), strong concurrency, and reliability make it ideal for scalable transport systems.

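As a minimal sketch of the SQLite option, the snippet below stores zone-hour demand aggregates with Python's built-in sqlite3 module and queries the busiest hour per zone. The table name zone_hour_demand and its columns are hypothetical, not the schema used in the project code; PostgreSQL would use INSERT ... ON CONFLICT instead of SQLite's INSERT OR REPLACE.

```python
import sqlite3

def load_demand(conn, rows):
    """Create a hypothetical zone_hour_demand table and upsert (zone, hour, num_trips) rows."""
    conn.execute(
        """CREATE TABLE IF NOT EXISTS zone_hour_demand (
               zone TEXT NOT NULL,
               hour INTEGER NOT NULL CHECK (hour BETWEEN 0 AND 23),
               num_trips INTEGER NOT NULL,
               PRIMARY KEY (zone, hour)
           )"""
    )
    # INSERT OR REPLACE is SQLite syntax: re-loading a (zone, hour) slot overwrites it instead of duplicating it.
    conn.executemany(
        "INSERT OR REPLACE INTO zone_hour_demand (zone, hour, num_trips) VALUES (?, ?, ?)",
        rows,
    )
    conn.commit()

def peak_hour_per_zone(conn):
    """Return {zone: (peak_hour, num_trips)} using the hour with the most trips in each zone."""
    result = {}
    query = "SELECT zone, hour, num_trips FROM zone_hour_demand ORDER BY zone, num_trips DESC, hour"
    for zone, hour, trips in conn.execute(query):
        # Rows arrive sorted by trips (descending) within each zone, so the first row per zone wins.
        result.setdefault(zone, (hour, trips))
    return result

if __name__ == "__main__":
    # ":memory:" keeps the demo self-contained; pass a file path such as "transport_demand_analytics.db" to persist.
    conn = sqlite3.connect(":memory:")
    load_demand(conn, [("Z1", 8, 120), ("Z1", 18, 150), ("Z2", 22, 90), ("Z2", 9, 40)])
    print(peak_hour_per_zone(conn))  # {'Z1': (18, 150), 'Z2': (22, 90)}
```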

- Visualization: Matplotlib / Seaborn / Plotly

- Rationale:

- Matplotlib/Seaborn: Fundamental for creating static and statistical visualizations, such as time-series plots of demand, bar charts of peak hours, and basic heatmaps. Seaborn builds on Matplotlib for more aesthetically pleasing statistical graphics.

- Plotly: Crucial for creating interactive and web-friendly visualizations, especially for geospatial heatmaps and time-series charts that allow users to zoom, pan, and filter data. This is essential for a dynamic demand prediction dashboard.


- Optional ML Layer: Linear Regression / Decision Trees

- Rationale: These algorithms serve as a starting point for the predictive component.

- Linear Regression: Can predict the quantity of demand based on historical patterns.

- Decision Trees: Can identify complex non-linear relationships and interactions between features (e.g., time, location, weather) that influence demand. More advanced models like Random Forests, Gradient Boosting (XGBoost/LightGBM), or even neural networks could be explored for higher accuracy.

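Before fitting Linear Regression or a Decision Tree, it helps to have a yardstick the models must beat. A minimal sketch, assuming demand history arrives as hypothetical (zone, weekday, hour, num_trips) tuples: the historical-average baseline predicts each slot as the mean of past trips in the same zone, weekday, and hour, and mean absolute error (MAE) scores the forecast. This common seasonal baseline is swapped in for illustration; it is not the model from the project code.

```python
from collections import defaultdict

def fit_slot_means(history):
    """Average num_trips per (zone, weekday, hour) slot from hypothetical (zone, weekday, hour, num_trips) tuples."""
    sums = defaultdict(float)
    counts = defaultdict(int)
    for zone, weekday, hour, trips in history:
        key = (zone, weekday, hour)
        sums[key] += trips
        counts[key] += 1
    return {key: sums[key] / counts[key] for key in sums}

def predict(slot_means, zone, weekday, hour, default=0.0):
    """Forecast demand for one slot; slots never seen in training fall back to `default`."""
    return slot_means.get((zone, weekday, hour), default)

def mean_absolute_error(actual, predicted):
    """Average absolute gap between actual and predicted trip counts."""
    return sum(abs(a - p) for a, p in zip(actual, predicted)) / len(actual)

if __name__ == "__main__":
    history = [("Z1", 0, 8, 100), ("Z1", 0, 8, 120), ("Z1", 0, 9, 60)]
    means = fit_slot_means(history)
    test = [("Z1", 0, 8, 130), ("Z1", 0, 9, 50)]
    preds = [predict(means, z, d, h) for z, d, h, _ in test]
    print(preds, mean_absolute_error([t[3] for t in test], preds))  # [110.0, 60.0] 15.0
```

If Linear Regression or a Decision Tree cannot beat this baseline's MAE on held-out weeks, the added model complexity is not yet paying off.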

💼 Use Cases:

- Predict Peak Hours and High-Demand Zones in Cities: Identifies specific times of day (e.g., morning/evening commutes, weekend nights) and geographic areas (e.g., business districts, entertainment hubs, airports) that experience the highest demand for transport services.

- Optimize Driver Allocation in Ride-Sharing Companies: Informs ride-sharing platforms on where to direct drivers in anticipation of demand spikes, minimizing driver idle time and improving customer pick-up efficiency.

- Real-Time Fleet Routing and Resource Management: Provides dynamic recommendations for dispatching vehicles, re-routing existing fleets, or adjusting service coverage based on live and predicted demand.

- Urban Infrastructure Planning based on Mobility Heatmaps: Offers valuable insights to city planners for designing public transport routes, locating new stations, optimizing traffic signals, and identifying areas for infrastructure development.

- Automated Surge Pricing Triggers for Transport Apps: Supplies the data intelligence to dynamically adjust pricing in transport apps during periods of high demand and low supply, balancing supply and demand effectively.

- Event-Based Demand Forecasting: Predicts demand spikes related to special events (concerts, sports games, festivals) by incorporating external data sources.

- Dynamic Bus Scheduling: Helps public transport authorities adjust bus frequencies and routes in real-time or near-real-time based on actual and predicted passenger loads.
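Use cases such as driver allocation ultimately turn a forecast into a number of vehicles per zone. One simple approach, shown here as a hypothetical sketch rather than the project's own logic, splits a fixed fleet in proportion to predicted demand using the largest-remainder method, so the whole-number allocations always add up to the fleet size.

```python
import math

def allocate_drivers(predicted_demand, fleet_size):
    """Split fleet_size drivers across zones in proportion to predicted demand (largest-remainder method)."""
    total = sum(predicted_demand.values())
    if total <= 0:
        return {zone: 0 for zone in predicted_demand}
    quotas = {zone: fleet_size * d / total for zone, d in predicted_demand.items()}
    # Every zone first receives the integer part of its proportional quota.
    alloc = {zone: math.floor(q) for zone, q in quotas.items()}
    leftover = fleet_size - sum(alloc.values())
    # Remaining drivers go to the zones with the largest fractional remainders.
    by_remainder = sorted(quotas, key=lambda z: quotas[z] - alloc[z], reverse=True)
    for zone in by_remainder[:leftover]:
        alloc[zone] += 1
    return alloc

if __name__ == "__main__":
    # Hypothetical zone names and demand forecasts.
    print(allocate_drivers({"airport": 50, "downtown": 30, "suburb": 20}, 7))
    # {'airport': 4, 'downtown': 2, 'suburb': 1}
```

This ignores travel time between zones and each driver's current position; a production dispatcher would feed those into an optimization step rather than a pure proportional split.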

📈 Impact / ROI:

- 🚕 Reduces Idle Time for Drivers → Improves Profit Margins: By directing drivers to areas of impending demand, the system minimizes periods where drivers are waiting without passengers, directly increasing their earnings and the company's revenue per active vehicle.

- ⏱️ Enhances Commuter Satisfaction Through Faster ETAs: Vehicles positioned ahead of demand are available sooner, which shortens estimated times of arrival (ETAs) and noticeably improves the rider experience.

- 📉 Cuts Fuel Costs and Environmental Footprint: Optimized routing and reduced unnecessary cruising contribute to lower fuel consumption and a smaller carbon footprint, aligning with sustainability goals.

- 🏙️ Helps Urban Planners Optimize Routes and Schedules: Provides data-driven evidence for municipal decisions regarding public transport networks, traffic management, and smart city initiatives, leading to more efficient urban environments.

- 📊 Empowers Transport Startups with Data-Driven Scaling: Offers a competitive edge to new transport businesses by enabling intelligent expansion and resource allocation based on predictive analytics rather than guesswork.

- ⬆️ Maximizes Vehicle Utilization: Ensures that each vehicle in the fleet is used as effectively as possible, extending its productive lifespan and maximizing ROI on vehicle investments.

🌐 Real-World Example:

The project directly simulates the advanced analytical engines that underpin the operational strategies of leading global transport providers:

- Uber and Ola: These ride-sharing giants rely heavily on sophisticated demand-prediction engines. They analyze millions of historical trips, real-time traffic conditions, weather patterns, and even external events to predict where and when to dispatch drivers. This pre-positioning reduces waiting times for riders and raises the number of paid trips each driver completes per hour, increasing revenue for both the company and its drivers.

- Municipalities (e.g., NYC MTA, Transport for London): Public transport authorities use similar models to optimize bus routes, subway schedules, and even traffic signal timings. By understanding passenger flow patterns and predicting congestion, they can enhance service efficiency, reduce delays, and improve the overall urban commuting experience. This also extends to planning future infrastructure based on long-term mobility forecasts.

This pipeline effectively simulates that entire backend flow, demonstrating the ability to build a comprehensive system that transforms raw geospatial and temporal data into actionable insights for dynamic resource allocation.

🚀 Results:

- ✅ Extracts trip logs with timestamps, GPS coordinates, and fare data: The initial ETL step successfully ingests raw data, typically from CSVs, APIs, or databases, capturing essential details like trip_id, pickup_timestamp, dropoff_timestamp, pickup_latitude, pickup_longitude, dropoff_latitude, dropoff_longitude, and fare_amount.

- ✅ Transforms raw logs into hour/day/location-based matrices: This critical transformation involves aggregating trips by specific time windows (e.g., hourly, daily, weekday vs. weekend) and spatial grids (e.g., dividing the city into hexagonal or square zones). Features like num_trips, avg_fare, and avg_trip_duration are calculated per time-location cell. Geopandas is instrumental here for spatial binning.

- ✅ Loads the clean data into a database + visualizes heatmaps of demand: The structured and aggregated data is loaded into SQLite or PostgreSQL. Interactive visualizations (using Plotly) are generated, displaying heatmaps that visually represent high-demand areas at different times, allowing users to quickly identify hot spots. Time-series plots of demand over hours/days are also produced.

- ✅ Applies predictive models to forecast demand spikes in advance: The pipeline trains and deploys machine learning models (Linear Regression, Decision Trees, or more advanced) on the historical data to predict future demand. This includes forecasting the number of trips for specific zones at upcoming hours or days, providing a forward-looking view.

- ✅ Outputs usable insights for driver/fleet allocation decisions: The ultimate output is a set of actionable recommendations. This could be a list of "high-demand zones for the next hour," "recommended driver reallocation strategies," or a "predicted number of vehicles needed in X area." These insights directly inform operational decisions for fleet managers and dispatchers.
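The extract-and-transform steps above can be sketched with the standard library alone. This minimal version assumes square grid cells of a hypothetical 0.01-degree size (about 1.1 km of latitude), ISO-8601 pickup timestamps, and the pickup_* and fare_amount field names listed above; it counts trips and averages fares per (zone, weekday, hour) cell. A hexagonal grid or a Geopandas spatial join would replace zone_id in a fuller build.

```python
import math
from collections import defaultdict
from datetime import datetime

CELL_DEG = 0.01  # hypothetical grid size in degrees (about 1.1 km of latitude)

def zone_id(lat, lon, cell=CELL_DEG):
    """Map a GPS point to a square grid-cell label built from floored lat/lon indices."""
    return f"r{math.floor(lat / cell)}_c{math.floor(lon / cell)}"

def aggregate_trips(trips):
    """Group trip dicts into {(zone, weekday, hour): {"num_trips": n, "avg_fare": x}}."""
    counts = defaultdict(int)
    fare_sums = defaultdict(float)
    for trip in trips:
        ts = datetime.fromisoformat(trip["pickup_timestamp"])
        # weekday(): Monday is 0 and Sunday is 6.
        key = (zone_id(trip["pickup_latitude"], trip["pickup_longitude"]), ts.weekday(), ts.hour)
        counts[key] += 1
        fare_sums[key] += trip["fare_amount"]
    return {
        key: {"num_trips": counts[key], "avg_fare": round(fare_sums[key] / counts[key], 2)}
        for key in counts
    }

if __name__ == "__main__":
    sample = [
        {"pickup_timestamp": "2025-03-03T08:15:00", "pickup_latitude": 40.7512,
         "pickup_longitude": -73.9855, "fare_amount": 12.0},
        {"pickup_timestamp": "2025-03-03T08:45:00", "pickup_latitude": 40.7514,
         "pickup_longitude": -73.9851, "fare_amount": 18.0},
    ]
    for cell, stats in aggregate_trips(sample).items():
        print(cell, stats)
```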

Project 3: Smart Transport Demand Prediction System Code:

🔗 View Project Code on GitHub

How to Run the Project:

- Database Setup:

- For SQLite (default in the code): No special setup is needed. The database file transport_demand_analytics.db will be created automatically in the same directory where you run the script.

- For PostgreSQL:

- Ensure you have a PostgreSQL server running.

- Create a new database (e.g., transport_db).

- Create a user with appropriate permissions.

- Crucially, update the DATABASE_URL variable in the app.py script to point to your PostgreSQL database (e.g., DATABASE_URL = "postgresql://your_user:your_password@localhost:5432/transport_db").

Install Dependencies: Open your terminal or command prompt and run:

pip install pandas numpy sqlalchemy scikit-learn matplotlib seaborn

# If you plan to use PostgreSQL later, also install:

# pip install psycopg2-binary

Run the ETL and Prediction Pipeline: Save the project code from the GitHub repository as app.py (or any other .py file), then run it from your terminal:

python app.py

The script will print progress messages, the model's performance metrics, and a sample prediction, then display two plots: average trips by hour of day and top zones by average trips. Close the plot windows to let the script finish.

Next Steps & Improvements:

- Advanced Spatial Analysis (Geopandas): For more sophisticated geographic analysis (e.g., using actual city polygons, hexagonal grids like H3, or calculating distances), integrate Geopandas and potentially PostGIS with PostgreSQL.

- More Sophisticated ML Models: Explore other machine learning algorithms such as Random Forests, Gradient Boosting (XGBoost, LightGBM), or even time-series specific models (ARIMA, Prophet, LSTM) for more accurate predictions.

- Feature Engineering: Incorporate more features that influence demand, such as:

- Weather data (temperature, precipitation).

- Public holiday information.

- Information about major events (concerts, sports games).

- Lagged demand features (demand from previous hours/days).

- Real-Time Data Ingestion: For a truly "smart" system, integrate real-time data streams (e.g., from Kafka or RabbitMQ) for live demand updates and predictions.

- Interactive Dashboard: Create a web-based dashboard using Plotly Dash, Streamlit, or Metabase/Power BI to visualize demand heatmaps, time-series predictions, and operational insights interactively.

- Model Deployment: Implement a way to deploy the trained ML model as an API endpoint for real-time prediction requests from dispatch systems.

- Optimization Algorithms: Beyond prediction, integrate optimization algorithms to recommend optimal driver positioning or fleet routing based on predicted demand.

- Error Handling and Logging: Add robust error handling and comprehensive logging for production environments.
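The lagged demand features suggested under Feature Engineering can be built from an hourly series per zone. A minimal sketch, assuming a hypothetical input list of hourly trip counts for a single zone with no missing hours: each output row pairs the current count (the target) with the counts 1 hour and 24 hours earlier, and rows without a full history are skipped rather than filled.

```python
def add_lag_features(hourly_counts, lags=(1, 24)):
    """Turn an hourly count series into rows like {'target': y, 'lag_1': ..., 'lag_24': ...}.

    Assumes hourly_counts is a hypothetical gap-free list of trip counts for one zone.
    """
    rows = []
    start = max(lags)  # first index at which every requested lag exists
    for t in range(start, len(hourly_counts)):
        row = {"target": hourly_counts[t]}
        for lag in lags:
            row[f"lag_{lag}"] = hourly_counts[t - lag]
        rows.append(row)
    return rows

if __name__ == "__main__":
    series = list(range(30))  # stand-in for 30 hours of trip counts
    print(add_lag_features(series)[0])  # {'target': 24, 'lag_1': 23, 'lag_24': 0}
```

When evaluating a model on these rows, split train and test by time (for example, hold out the final week) so the evaluation mimics real forecasting instead of mixing future hours into training.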

This code provides a solid foundation for your Smart Transport Demand Prediction System, demonstrating the core ETL and predictive components.

🚀 Ready to turn raw data into real-world intelligence and career-defining impact?

At Huebits, we don’t just teach Data Science — we train you to build end-to-end solutions that power predictions, automate decisions, and drive business outcomes.

From fraud detection to personalized recommendations, you'll gain hands-on experience working with messy datasets, training ML models, and deploying full-stack data systems — where real-world complexity meets production-grade precision.

🧠 Whether you're a student, aspiring data scientist, or career shifter, our Industry-Ready Data Science Engineering Program is your launchpad.

Master Python, Pandas, Scikit-learn, TensorFlow, Power BI, SQL, and cloud deployment — while building job-grade ML projects that solve real business problems.

🎓 Next Cohort Launching Soon!

🔗 Join Now and become part of the Data Science movement shaping the future of business, finance, healthcare, marketing, and AI-driven industries across the ₹1.5 trillion+ data economy.

🌦️ 4. Weather Pattern Intelligence Pipeline

🔍 Project Overview:

This ETL pipeline is a sophisticated automated system designed for the continuous extraction and transformation of diverse weather data. It sources information from readily available public APIs (like OpenWeatherMap) and, where necessary, employs web scraping techniques to gather granular weather metrics. The core objective is to meticulously process this raw data to detect long-term climate patterns, identify anomalies (e.g., unusual heatwaves, prolonged droughts, unexpected cold snaps), and build a robust historical archive of weather trends. The generated insights are then leveraged to provide critical predictive intelligence for a wide array of industries, including agriculture, logistics, energy, and emergency services. This project moves beyond mere weather reporting; it's about strategically utilizing atmospheric data to inform critical business decisions and enhance resilience.

⚙️ Tech Used:

- Programming Language: Python

- Rationale: Python's strong ecosystem for data manipulation, web requests, and cloud integration makes it the ideal language. Its flexibility allows for seamless interaction with APIs and web scraping tools, as well as easy integration with cloud services.

- Data Extraction:

- OpenWeatherMap API: A primary source for structured weather data (current, forecast, historical) for various locations. APIs provide reliable, formatted data that is easier to parse.

- BeautifulSoup (for scraping): Utilized for extracting data from websites that may not offer direct APIs, or to supplement API data with specific, publicly available information (e.g., local weather station data from government sites). It allows parsing HTML and XML documents to pull out relevant information.

- Workflow Automation:

- AWS Lambda: A serverless compute service that runs code in response to events (e.g., scheduled time intervals). It's ideal for running periodic data extraction jobs without managing servers, offering cost-efficiency and scalability.

- Cron jobs (alternative): For local development or simpler deployments, traditional cron jobs can schedule Python scripts to run at set intervals.

- Data Storage:

- AWS S3: A highly scalable, durable, and cost-effective object storage service. Ideal for storing raw, semi-processed, and refined weather data files (e.g., JSON, CSV, Parquet) as a data lake, providing a flexible foundation for analytics.

- BigQuery: Google Cloud's fully managed, serverless data warehouse. Excellent for storing vast amounts of structured weather data, enabling fast analytical queries and integration with other Google Cloud services for advanced analytics.

- SQLite (alternative): Suitable for smaller-scale projects or local development, providing a simple, file-based relational database for structured, aggregated weather data.

Libraries:

- Requests: A fundamental Python library for making HTTP requests to interact with RESTful APIs (like OpenWeatherMap).

- Pandas: Essential for data cleaning, transformation, aggregation, and handling time-series weather data. It will be used to structure the extracted data into DataFrames for consistent processing.

- JSON: Python's built-in library for working with JSON data, which is the common format returned by many web APIs.

- Boto3: The Amazon Web Services (AWS) SDK for Python, enabling interaction with AWS services like S3 (for storing data) and Lambda (for deploying and triggering ETL code).
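To tie Lambda, S3, and Boto3 together, a scheduled Lambda function can write each raw API response to a date-partitioned key in the data lake. This is a hypothetical sketch: the bucket name, the raw/weather/ prefix, the partition layout, and the event shape are assumptions, and in a real deployment the function would call the weather API itself rather than receive the payload in its event. lambda_handler(event, context) is the standard Python handler signature and put_object(Bucket=..., Key=..., Body=...) is a real Boto3 S3 client method; the client is injectable so the logic can be tested without AWS credentials.

```python
import json
from datetime import datetime, timezone

BUCKET = "my-weather-data-lake"  # hypothetical bucket name

def build_s3_key(city, fetched_at):
    """Hive-style partitioned key, e.g. raw/weather/city=london/date=2025-01-15/hour=13.json."""
    return (
        f"raw/weather/city={city.lower().replace(' ', '_')}/"
        f"date={fetched_at:%Y-%m-%d}/hour={fetched_at:%H}.json"
    )

def lambda_handler(event, context, s3_client=None):
    """Store one weather payload; assumes a hypothetical event shape {"city": ..., "payload": {...}}."""
    if s3_client is None:
        import boto3  # only needed when running inside AWS with credentials configured
        s3_client = boto3.client("s3")
    fetched_at = datetime.now(timezone.utc)
    key = build_s3_key(event["city"], fetched_at)
    s3_client.put_object(
        Bucket=BUCKET,
        Key=key,
        Body=json.dumps(event["payload"]).encode("utf-8"),
    )
    return {"statusCode": 200, "key": key}
```

Partitioning by city, date, and hour keeps each scheduled run's output in its own object and lets query engines that understand Hive-style paths skip irrelevant partitions.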

💼 Use Cases:

- Forecasting Energy Grid Demand based on Temperature Trends: Energy companies can predict consumption spikes due to extreme heat/cold, optimizing power generation and distribution to prevent blackouts and manage costs.

- Alerting Logistics Companies of Upcoming Disruptions: Provides early warnings for severe weather events (e.g., heavy snow, hurricanes, floods) that could impact transportation routes, allowing for re-routing, rescheduling, and supply chain adjustments.

- Helping Farmers Plan Irrigation and Harvest Cycles: Delivers hyper-local insights on rainfall, temperature, and humidity, enabling optimized irrigation, precise planting/harvesting schedules, and proactive disease prevention.

- Insurance Risk Assessment Models (Climate-Linked Claims): Informs insurance companies about regions prone to specific climate risks (e.g., hail, flooding, wildfires) to refine policy pricing, assess claim likelihood, and develop new climate-resilient products.

- Environmental Research and City Planning: Provides valuable long-term data for climate scientists to study global warming impacts, and for urban planners to design resilient infrastructure, manage water resources, and plan for climate change adaptation.

- Disaster Preparedness & Emergency Services: Offers critical data for anticipating natural disasters, allocating emergency resources, and informing public safety measures.

- Tourism & Recreation Planning: Helps forecast ideal conditions for outdoor activities, informing tourism operators and individual travelers.

📈 Impact / ROI:

- 🌱 Supports Precision Agriculture and Food Supply Stability: By providing tailored weather intelligence, the pipeline enables farmers to make data-driven decisions that increase crop yields, reduce water waste, and minimize crop loss, contributing to more stable food production.

- 🚛 Reduces Transport Delays Caused by Weather Surprises: Proactive weather alerts and predictive routing significantly minimize the costly impacts of adverse weather on logistics, ensuring timely deliveries and reducing operational expenses.

- ⚡ Optimizes Energy Usage Through Predictive Grid Balancing: Utilities can forecast demand more precisely, leading to more efficient energy generation, lower peak-load costs, and better grid stability, which can translate into substantial operational savings.

- 📊 Creates Long-Term Value Through Climate-Informed Business Decisions: Equips businesses with a deeper understanding of climate risks and opportunities, enabling strategic planning, investment in climate-resilient operations, and identifying new market segments.

- 🔄 Can be Monetized via API to Other Startups Needing Weather Insights: The well-structured and continuously updated weather data can be exposed as an API service, creating a new revenue stream by selling valuable insights to other businesses (e.g., smart home companies, drone operators, real estate developers).

- 🌍 Contributes to Environmental Resilience: By enabling better understanding and prediction of climate patterns, the pipeline indirectly supports efforts in environmental protection and climate change mitigation.

🌐 Real-World Example:

This project directly simulates the intricate backend systems that power leading commercial weather intelligence providers, which go far beyond a simple weather app. Companies like:

- The Weather Company (owned by IBM until its 2024 sale to Francisco Partners): A global leader that provides hyper-local, high-resolution weather data and AI-driven insights to industries ranging from aviation to retail, helping them optimize operations and mitigate weather-related risks.

- AccuWeather: Offers detailed forecasts and weather insights to businesses, media outlets, and consumers, often integrating into enterprise systems for critical decision-making.

- Tomorrow.io (formerly ClimaCell): Known for its "Weather Intelligence Platform" that uses proprietary sensing technology and AI to provide precise, impact-driven weather forecasts for industries like aviation, logistics, and on-demand services, enabling operational optimization based on weather.

This ETL pipeline demonstrates a simplified, yet functionally robust, version of the data collection, processing, and storage infrastructure that these companies utilize to offer their premium weather intelligence services.

🚀 Results:

- ✅ Automatically pulls daily/hourly weather metrics (temp, humidity, wind): The extraction layer is fully automated, configured to fetch current weather conditions, short-term forecasts, and historical data at defined intervals (e.g., every hour, daily summary) for specified geographical locations, including parameters like temperature, humidity, wind speed/direction, precipitation, atmospheric pressure, and cloud cover.

- ✅ Transforms data into normalized structures with timestamps and location codes: Raw JSON or scraped HTML data is meticulously parsed, cleaned (e.g., unit conversion, handling missing values), and transformed into a standardized, tabular format. Each record includes precise timestamps, unique location identifiers (e.g., latitude/longitude, city ID), and structured weather parameters, making it ready for analysis and storage.

- ✅ Loads clean data into AWS S3 or BigQuery for dashboarding: The transformed data is efficiently loaded into the chosen scalable storage solution (S3 for cost-effective data lake storage, BigQuery for direct analytical querying). This structured data forms the backbone for connecting to BI tools and analytical dashboards.

- ✅ Enables week-on-week trend visualizations and anomaly detection: With the historical data in place, users can generate interactive charts (e.g., using Plotly or a BI tool) to visualize temperature trends over weeks or months, compare current weather to historical averages, and easily spot anomalies that deviate significantly from typical patterns.

- ✅ Easily extended to include weather-based alert systems (SMS/Email/API): The clean, processed data and identified anomalies serve as direct triggers for an alert system. This can be extended to send automated SMS or email notifications to relevant stakeholders (e.g., farmers about impending frost, logistics managers about heavy rain) or expose specific alert data via an internal API for integration with other operational systems.
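The normalization step in the results above can be illustrated with a hedged sketch. It assumes the JSON shape returned by OpenWeatherMap's current-weather endpoint (https://api.openweathermap.org/data/2.5/weather) requested with units=metric; the fetch helper uses the standard library's urllib as a stand-in for Requests, and the flat record's field names are illustrative choices, not the project's schema.

```python
import json
import urllib.parse
import urllib.request
from datetime import datetime, timezone

def fetch_current_weather(city, api_key):
    """Call OpenWeatherMap's current-weather endpoint (metric units) and return the parsed JSON."""
    query = urllib.parse.urlencode({"q": city, "appid": api_key, "units": "metric"})
    url = f"https://api.openweathermap.org/data/2.5/weather?{query}"
    with urllib.request.urlopen(url, timeout=10) as resp:
        return json.load(resp)

def normalize(payload):
    """Flatten one API response into a tabular record with a UTC timestamp and location codes.

    Output field names (temp_c, humidity_pct, ...) are hypothetical choices for this sketch.
    """
    return {
        "observed_at": datetime.fromtimestamp(payload["dt"], tz=timezone.utc).isoformat(),
        "city_id": payload.get("id"),
        "city": payload.get("name"),
        "lat": payload["coord"]["lat"],
        "lon": payload["coord"]["lon"],
        "temp_c": payload["main"]["temp"],
        "humidity_pct": payload["main"]["humidity"],
        "pressure_hpa": payload["main"]["pressure"],
        "wind_speed_ms": payload.get("wind", {}).get("speed"),
        # The rain block only appears when it has rained; this sketch assumes 0 mm when it is absent.
        "rain_1h_mm": payload.get("rain", {}).get("1h", 0.0),
        "condition": payload["weather"][0]["main"] if payload.get("weather") else None,
    }
```

Keeping the fetch and normalize steps separate means the transform can be tested on saved JSON files without network access or an API key.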

Project 4: Weather Pattern Intelligence Pipeline Code:

🔗 View Project Code on GitHub

How to Run the Project:

- Get OpenWeatherMap API Key:

- Go to OpenWeatherMap and sign up for a free account.

- Generate an API key.

- Replace "YOUR_OPENWEATHERMAP_API_KEY" in the app.py script with your actual API key.

Install Dependencies: Open your terminal or command prompt and run:

pip install requests pandas sqlalchemy matplotlib seaborn

Run the ETL Pipeline: Save the project code from the GitHub repository as app.py (or any other .py file), then run it from your terminal:

python app.py

The script will fetch data, print progress, save to weather_data_lake.csv, populate weather_analytics.db, and then display a plot showing temperature trends. Close the plot window to allow the script to finish execution.

Next Steps & Improvements:

- Scheduling: For true automation, integrate this script with a scheduler like AWS Lambda (as mentioned in the project overview) or a local cron job to run it at regular intervals (e.g., hourly, daily).

- Error Handling and Logging: Enhance error handling, especially for API rate limits and network issues. Implement a proper logging system (e.g., Python's logging module) to track pipeline execution and errors.

- More Data Sources: Implement web scraping using BeautifulSoup to gather supplementary data from other weather websites, as mentioned in the project overview.

- Advanced Data Storage:

- AWS S3: If you move to AWS, replace the local CSV load_to_csv with boto3 calls to upload files to an S3 bucket.

- BigQuery: For large-scale data warehousing, you would use Google Cloud's BigQuery client library to load data.

- Advanced Analytics:

- Anomaly Detection: Implement algorithms to detect unusual weather patterns (e.g., using statistical methods or machine learning).

- Forecasting: Build predictive models (e.g., ARIMA, Prophet, or deep learning models) to forecast future weather conditions or their impact (e.g., energy demand).

- Interactive Dashboard: Connect your SQLite (or BigQuery/PostgreSQL) database to a BI tool like Metabase or Power BI to create dynamic, interactive dashboards for visualizing trends, anomalies, and insights.

- Alerting System: Based on detected anomalies or forecasts, integrate with messaging services (e.g., Twilio for SMS, SendGrid for email) to send automated alerts.

- Geospatial Data: Incorporate Geopandas if you need to work with more complex geographical data and spatial analysis.
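For the anomaly-detection step under Advanced Analytics, one simple statistical method is a trailing z-score: flag a day whose temperature sits more than a chosen number of standard deviations from the mean of the preceding days. The 7-day window and the 2.5 threshold below are arbitrary assumptions to tune on real data, and strongly seasonal series are usually better compared against the same calendar period in past years.

```python
from statistics import mean, stdev

def flag_anomalies(daily_temps, window=7, threshold=2.5):
    """Return (index, value, z) for days more than `threshold` sample std devs from the trailing-window mean."""
    anomalies = []
    for i in range(window, len(daily_temps)):
        history = daily_temps[i - window:i]
        mu, sigma = mean(history), stdev(history)
        if sigma == 0:
            continue  # a perfectly flat window gives no scale to judge deviations against
        z = (daily_temps[i] - mu) / sigma
        if abs(z) > threshold:
            anomalies.append((i, daily_temps[i], round(z, 2)))
    return anomalies

if __name__ == "__main__":
    temps = [20, 21, 19, 20, 22, 21, 20, 35, 21]  # illustrative daily highs in °C
    for index, value, z in flag_anomalies(temps):
        print(f"day {index}: {value} °C (z = {z})")
```

Note that an anomalous day stays inside the next window and inflates its spread, which is why the day after the spike is not flagged here; robust alternatives use the median and median absolute deviation instead.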

This code provides a strong foundation for building your comprehensive Weather Pattern Intelligence Pipeline.

🏦 5. Financial Sentiment & Price Correlation Engine

🔍 Project Overview:

This ETL pipeline is a sophisticated analytical system engineered to uncover and quantify the intricate relationship between public sentiment (derived from financial news) and real-time stock price movements. It achieves this by performing a multi-stage process:

- Extraction of Financial News Headlines: It systematically gathers a vast array of news headlines from reputable financial news sources.

- Stock Price Data Ingestion: Simultaneously, it ingests corresponding stock price data for relevant assets (equities, indices, commodities).

- Sentiment Scoring: Advanced natural language processing (NLP) techniques are applied to the news headlines to assign sentiment scores (e.g., positive, negative, neutral polarity).

- Correlation and Analysis: The sentiment scores are then meticulously joined with the price data by timestamp and analyzed to identify patterns and correlations, such as whether a surge in negative news about a company precedes a price drop, or vice versa.

The ultimate output is a powerful tool that can guide dynamic trading strategies, trigger proactive investor alerts, and provide deep insights into market psychology. This project embodies the intersection of Wall Street's quantitative demands with cutting-edge data science methodologies.

⚙️ Tech Used:

- Languages: Python

- Rationale: Python's rich ecosystem of libraries for data manipulation (Pandas), web requests (Requests), natural language processing (TextBlob, VaderSentiment), and visualization (Matplotlib) makes it the ideal choice for building all components of this pipeline. Its flexibility also allows for easy integration with various APIs and databases.

- APIs:

- NewsAPI: A popular choice for accessing a broad range of news articles and headlines from various sources globally. It provides structured data (title, description, source, publication date) crucial for sentiment analysis. Other options could include Financial Modeling Prep (FMP), Finnhub, or MarketAux for more domain-specific financial news.

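- yFinance (Yahoo Finance): A simple and widely used open-source Python library (an unofficial wrapper, not a Yahoo-supported API) for fetching historical and recent stock market data (prices, volumes, market caps) from Yahoo Finance. It covers a wide range of equities and indices, though quotes may be delayed, so it suits research and prototyping better than live trading.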

- Database:

- MongoDB: A NoSQL document database, excellent for storing semi-structured data like news articles (which might have varying fields) and for its flexible schema. It's often chosen for its scalability and ease of use with JSON-like data.

- PostgreSQL: A powerful relational database that, especially with extensions like TimescaleDB, excels at handling time-series data. It's robust for storing structured stock price data and aggregated sentiment scores, allowing for complex analytical queries. The choice depends on the specific data volume and querying patterns, but PostgreSQL offers strong analytical capabilities for time-series correlation.

Libraries:

- TextBlob / VaderSentiment: These are popular Python libraries for performing sentiment analysis.

- TextBlob: Provides a simple API for common NLP tasks, including sentiment analysis (polarity and subjectivity). It's good for quick prototyping.

- VaderSentiment: Specifically designed for sentiment analysis of social media text, which is often short and informal. It's lexicon- and rule-based and can handle nuances like exclamations and capitalization, making it potentially more robust for concise financial headlines. For more advanced financial sentiment, fine-tuned transformer models (like FinBERT) would be a significant upgrade but require more computational resources.

- Pandas: The cornerstone for all data manipulation, cleaning, joining (merging news sentiment with price data by timestamp), and aggregation within the pipeline.

- Matplotlib: A fundamental plotting library for creating static visualizations of price trends, sentiment scores, and their correlations.

- (Optional) Plotly / Seaborn: For more interactive and aesthetically pleasing visualizations within Jupyter or Streamlit.

- Optional Layer:

- Jupyter: An interactive computing environment that is perfect for exploratory data analysis, developing sentiment analysis models, testing correlations, and generating ad-hoc reports and visualizations.

- Streamlit for Reporting: Enables the rapid creation of interactive web dashboards purely in Python, allowing users to visualize live sentiment, price data, and correlation analyses without complex front-end development. It's excellent for presenting the project's results in an accessible manner.
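As a sketch of the sentiment-scoring step, the helper below maps VADER's compound score (range -1 to +1) to a label using the ±0.05 cut-offs recommended in VADER's documentation. The scorer is injectable so the labeling logic can be tested without the library; with vaderSentiment installed, SentimentIntensityAnalyzer().polarity_scores(text)["compound"] supplies the score. The function names here are hypothetical.

```python
def label_from_compound(compound, cutoff=0.05):
    """Map a VADER compound score to 'positive', 'negative', or 'neutral' using the ±cutoff convention."""
    if compound >= cutoff:
        return "positive"
    if compound <= -cutoff:
        return "negative"
    return "neutral"

def score_headlines(headlines, scorer=None):
    """Return (headline, compound, label) tuples; `scorer` maps text to a compound score."""
    if scorer is None:
        # Requires `pip install vaderSentiment`; only imported when no scorer is injected.
        from vaderSentiment.vaderSentiment import SentimentIntensityAnalyzer
        analyzer = SentimentIntensityAnalyzer()

        def scorer(text):
            return analyzer.polarity_scores(text)["compound"]

    results = []
    for text in headlines:
        compound = scorer(text)
        results.append((text, compound, label_from_compound(compound)))
    return results
```

Swapping in FinBERT later only requires a different scorer function that returns a value on the same -1 to +1 scale.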

💼 Use Cases:

- Real-Time Sentiment Dashboards for Investors: Provides immediate visual feedback on the prevailing sentiment surrounding specific stocks or the broader market, helping individual and institutional investors gauge market mood.

- Correlation Analysis for Financial Advisors: Allows advisors to demonstrate how external factors (news sentiment) can influence client portfolios, aiding in risk communication and strategic allocation discussions.

- Fuel for ML-Based Trading Bots: The calculated sentiment scores and their correlation with price movements can serve as powerful features for machine learning models that predict future price action, forming the basis of automated trading strategies.

- Market Event Anomaly Detection (e.g., Panic or Euphoria Spikes): Automatically flags extreme positive or negative sentiment swings that deviate significantly from historical norms, indicating potential market overreactions (panic selling or irrational exuberance).

- Quantitative Finance Portfolio Experiments: Enables researchers and quantitative analysts to backtest various trading strategies that incorporate sentiment as a leading or lagging indicator, optimizing portfolio construction and risk management.

- Risk Management and Hedging: Identifies potential shifts in market sentiment that could impact specific asset classes or industries, allowing for proactive hedging strategies.

- Due Diligence for Investment Research: Provides a structured way to incorporate qualitative news data into quantitative fundamental analysis.

📈 Impact / ROI:

- 📉 Helps Reduce Investment Risk Through Data-Backed Decisions: By providing insights into how market sentiment is reacting to news, investors can make more informed decisions, potentially avoiding positions in companies facing significant negative sentiment or capitalizing on positive shifts.

- 📈 Can Improve Portfolio Strategy Performance: Integrating sentiment signals can lead to more adaptive and potentially higher-performing trading strategies, especially for short-to-medium term positions.

- 🔎 Tracks How Specific Words (e.g., "crash", "boost") Influence Volatility: The analysis can reveal which keywords or phrases in financial news have the most significant impact on price volatility, offering a deeper understanding of market reactions.

- 💡 Powers Sentiment-Triggered Buy/Sell Signals: The pipeline can be configured to generate automated buy or sell signals when sentiment crosses certain thresholds or shows strong correlation with historical price movements.

- 🧠 Builds Skills Relevant to Quant Trading & Hedge Fund Pipelines: Developing this project provides hands-on experience with alternative data sources, time-series analysis, NLP in finance, and robust data engineering, all highly sought-after skills in quantitative finance and hedge fund environments.

- 💵 Can be Monetized as an Alternative Data Product: The processed sentiment data and correlations can be packaged and sold to other financial institutions or traders as a valuable alternative data source.
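Sentiment-triggered buy/sell signals can be sketched as threshold crossings on a smoothed sentiment series. This hypothetical version uses a 3-period simple moving average and symmetric ±0.2 thresholds, both placeholders that would need backtesting; it emits a signal only in the period where the average crosses a threshold, not in every period it stays beyond it.

```python
def sentiment_signals(scores, window=3, upper=0.2, lower=-0.2):
    """Return (index, 'BUY' | 'SELL') where the moving-average sentiment crosses a threshold."""
    signals = []
    prev_avg = None
    for i in range(window - 1, len(scores)):
        avg = sum(scores[i - window + 1:i + 1]) / window  # simple moving average ending at i
        if prev_avg is not None:
            if prev_avg <= upper < avg:
                signals.append((i, "BUY"))  # crossed upward through the positive threshold
            elif prev_avg >= lower > avg:
                signals.append((i, "SELL"))  # crossed downward through the negative threshold
        prev_avg = avg
    return signals

if __name__ == "__main__":
    daily_sentiment = [0.0, 0.1, 0.2, 0.5, 0.6, 0.1, -0.5, -0.6, -0.4]  # illustrative values
    print(sentiment_signals(daily_sentiment))  # [(3, 'BUY'), (7, 'SELL')]
```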

🌐 Real-World Example:

This project directly emulates the sophisticated, data-intensive operations within leading financial information providers and alternative data firms:

- Bloomberg: Beyond traditional news, Bloomberg Terminal offers sentiment analysis features on news and social media, providing investors with a quantitative measure of market mood for individual stocks and the broader market.

- AlphaSense: A market intelligence platform that uses AI to extract insights from vast amounts of business content, including earnings calls, news, and research reports, often incorporating sentiment analysis to identify key trends and risks.

- Robinhood: While primarily a trading platform, even consumer-facing apps like Robinhood increasingly incorporate simplified sentiment indicators or "news impact" labels derived from similar backend pipelines to guide novice investors.

Alternative data funds and quantitative trading desks at major financial institutions thrive on pipelines exactly like this one. They constantly seek out unique, non-traditional data sources (like news sentiment, satellite imagery, social media chatter) to gain an informational edge and build proprietary trading algorithms.

🚀 Results:

- ✅ Extracts latest finance headlines + daily stock prices: The pipeline successfully connects to NewsAPI to pull a continuous stream of financial news headlines for specified companies or sectors and utilizes yFinance to fetch daily (or even intraday) open, high, low, close (OHLC) prices and volume data for corresponding stock tickers.

- ✅ Transforms text into sentiment polarity scores: Each extracted headline undergoes natural language processing, where TextBlob or VADER (or a more advanced FinBERT model, if integrated) assigns a numerical sentiment score (e.g., -1 for very negative, 0 for neutral, +1 for very positive) to capture the emotional tone of the news.

- ✅ Joins sentiment and price data by timestamp: A crucial step where news sentiment data (with its publication timestamp) is accurately merged with stock price data (with its corresponding date/time) to enable direct comparative analysis. This might involve aggregating sentiment over specific time windows (e.g., hourly, daily) to align with price data granularity.

- ✅ Correlates sentiment shifts with short-term price changes: Statistical analysis is performed to identify relationships. This could involve calculating Pearson correlation coefficients between aggregated sentiment scores and subsequent daily stock returns, or identifying patterns where significant sentiment changes consistently precede price movements (e.g., a sharp drop in sentiment leading to a price decline within the next 24-48 hours).

- ✅ Outputs interactive graphs showing "news impact vs. stock movement": The final deliverable includes dynamic visualizations (using Matplotlib/Plotly/Streamlit). Examples include:

- Overlaying a stock's price chart with a rolling average of its news sentiment.

- Scatter plots showing the relationship between daily sentiment change and daily stock return.

- Heatmaps illustrating sentiment and price correlation across different sectors or time periods.

- Interactive dashboards allowing users to select a stock, view its sentiment history, and observe how it aligns with price action.
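A minimal sketch of the aggregate-then-join step, written in plain Python so the logic stays visible. It assumes headlines arrive as (ISO-8601 timestamp, score) pairs, for example NewsAPI's publishedAt field plus a VADER compound score, and that daily closes are a date-keyed dict such as one built from yFinance output. The helper names `daily_sentiment` and `join_with_prices` are hypothetical, the sample inputs are illustrative only, and bucketing by the timestamp's own (UTC) date rather than the exchange's trading calendar is a simplifying assumption.

```python
from collections import defaultdict
from datetime import datetime
from statistics import mean
from typing import Dict, List, Tuple


def daily_sentiment(headlines: List[Tuple[str, float]]) -> Dict[str, float]:
    """Average headline scores per calendar day (date taken from the timestamp's own offset)."""
    buckets: Dict[str, List[float]] = defaultdict(list)
    for ts, score in headlines:
        # fromisoformat() rejects a trailing "Z" before Python 3.11, so normalise it.
        day = datetime.fromisoformat(ts.replace("Z", "+00:00")).date().isoformat()
        buckets[day].append(score)
    return {day: mean(scores) for day, scores in buckets.items()}


def join_with_prices(
    sentiment: Dict[str, float], closes: Dict[str, float]
) -> List[Tuple[str, float, float]]:
    """Inner-join daily sentiment with close-to-close returns on the date key.

    Each output row is (date, mean sentiment, return since the previous
    trading day in `closes`). Trading days without news, and news on
    non-trading days, are dropped by the inner join.
    """
    days = sorted(closes)  # ISO dates sort chronologically as strings
    rows = []
    for prev_day, day in zip(days, days[1:]):
        daily_return = closes[day] / closes[prev_day] - 1
        if day in sentiment:
            rows.append((day, sentiment[day], daily_return))
    return rows


if __name__ == "__main__":
    headlines = [
        ("2025-01-02T09:15:00Z", 0.5),
        ("2025-01-02T15:40:00Z", 0.25),
        ("2025-01-03T11:00:00Z", -0.5),
        ("2025-01-04T10:00:00Z", 0.8),  # Saturday: no matching trading day
    ]
    closes = {"2025-01-02": 100.0, "2025-01-03": 98.0, "2025-01-06": 99.0}
    scores = daily_sentiment(headlines)
    print(scores)
    print(join_with_prices(scores, closes))
```

Dropping weekend headlines is the simplest choice; a common alternative is to roll them forward to the next trading day so that Monday's return is paired with weekend news.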

Project 5: Financial Sentiment & Price Correlation Engine Code:

🔗 View Project Code on GitHub

How to Run the Project:

- Get NewsAPI Key:

- Go to NewsAPI.org and sign up for a free developer account.

- Find your API key on your dashboard.

- Replace "YOUR_NEWS_API_KEY" in the app.py script with your actual API key.

Install Dependencies: Open your terminal or command prompt and run:

pip install requests pandas sqlalchemy yfinance matplotlib seaborn nltk

The script will automatically download the necessary NLTK data (vader_lexicon) the first time it runs.

Run the ETL and Analysis Pipeline: Save the project code from the GitHub repository as app.py (or any other .py file), then run it from your terminal:

python app.py

The script will fetch data, perform sentiment analysis, load data into financial_sentiment_analytics.db, calculate correlations, and then display several plots showing the sentiment and price trends. Close the plot windows to allow the script to finish execution.
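The extract step that your NewsAPI key feeds into can be sketched as follows. This is a hedged illustration rather than the project's actual code: `build_news_request` and the `NEWS_API_KEY` environment variable are hypothetical names. Reading the key from the environment avoids hard-coding it in app.py, and sending it in NewsAPI's X-Api-Key header keeps it out of logged URLs.

```python
import os
from typing import Dict, Optional, Tuple
from urllib.parse import urlencode

NEWSAPI_EVERYTHING = "https://newsapi.org/v2/everything"


def build_news_request(
    query: str, from_date: str, api_key: Optional[str] = None
) -> Tuple[str, Dict[str, str]]:
    """Return (url, headers) for a NewsAPI /v2/everything search.

    The key comes from the argument or the NEWS_API_KEY environment
    variable (a hypothetical name), never from a literal in the script.
    """
    key = api_key or os.environ.get("NEWS_API_KEY")
    if not key:
        raise RuntimeError("Set NEWS_API_KEY or pass api_key explicitly")
    params = {
        "q": query,
        "from": from_date,        # ISO date, e.g. "2025-01-01"
        "language": "en",
        "sortBy": "publishedAt",  # newest articles first
        "pageSize": 100,          # NewsAPI's maximum page size
    }
    return f"{NEWSAPI_EVERYTHING}?{urlencode(params)}", {"X-Api-Key": key}


if __name__ == "__main__":
    url, headers = build_news_request("Tesla stock", "2025-01-01", api_key="demo-key")
    print(url)
```

With requests installed, the actual call would be `requests.get(url, headers=headers, timeout=10).json()["articles"]`, where each article carries `title` and `publishedAt` fields.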

Next Steps & Improvements:

- Advanced Sentiment Analysis:

- For more accurate financial sentiment, consider fine-tuning a pre-trained language model like FinBERT (requires more setup and computational resources).

- Implement entity recognition to link sentiment directly to specific companies mentioned in news.

- Real-time Processing: Integrate with message queues (e.g., Kafka, RabbitMQ) for real-time news ingestion and sentiment scoring.

- More Sophisticated Correlation:

- Explore time-lagged correlations to see if sentiment leads or lags price movements.

- Use Granger Causality tests to determine if sentiment "causes" price changes in a statistical sense.

- Incorporate other market data (e.g., volume, volatility) into the analysis.

- Interactive Dashboard: Build a web-based dashboard using Streamlit or Plotly Dash to allow users to select different stocks, view sentiment trends, and customize correlation analyses interactively.

- Trading Strategy Integration: Use the sentiment and correlation insights to generate automated buy/sell signals for a simulated trading environment.

- Cloud Deployment: For a production system, deploy components on cloud platforms (e.g., AWS Lambda for news extraction, Amazon RDS for PostgreSQL or a managed MongoDB service for storage, EC2 for analysis/dashboard).

- Data Quality and Deduplication: Implement more robust checks to prevent duplicate news entries, especially when fetching frequently or from multiple sources.
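The time-lagged correlation idea above can be sketched as a scan over lags, computing a Pearson coefficient at each offset. This is an illustrative sketch: `lagged_correlations` and `pearson` are hypothetical helpers, the inputs are assumed to be already aligned on the same trading days, and a correlation at some lag is evidence of lead/lag structure, not causation. For the Granger causality test mentioned above, statsmodels provides `grangercausalitytests` in `statsmodels.tsa.stattools`.

```python
import math
from typing import Dict, Sequence


def pearson(x: Sequence[float], y: Sequence[float]) -> float:
    """Pearson correlation of two equal-length series (nan if either is constant)."""
    n = len(x)
    mx, my = sum(x) / n, sum(y) / n
    cov = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sx = math.sqrt(sum((a - mx) ** 2 for a in x))
    sy = math.sqrt(sum((b - my) ** 2 for b in y))
    if sx == 0 or sy == 0:
        return math.nan
    return cov / (sx * sy)


def lagged_correlations(
    sentiment: Sequence[float], returns: Sequence[float], max_lag: int = 3
) -> Dict[int, float]:
    """Correlate sentiment on day t with returns on day t + lag, for each lag.

    Positive lags ask whether sentiment leads price moves; negative lags
    ask whether it trails them. Both series must cover the same trading
    days in the same order.
    """
    n = len(sentiment)
    results: Dict[int, float] = {}
    for lag in range(-max_lag, max_lag + 1):
        if lag >= 0:
            s, r = sentiment[: n - lag], returns[lag:]
        else:
            s, r = sentiment[-lag:], returns[: n + lag]
        if len(s) >= 3:  # skip lags that leave too few overlapping points
            results[lag] = pearson(s, r)
    return results


if __name__ == "__main__":
    # Synthetic data where each return echoes the previous day's sentiment.
    sentiment = [0.1, -0.2, 0.3, 0.0, -0.4, 0.5, 0.2]
    returns = [0.0] + [0.01 * s for s in sentiment[:-1]]
    for lag, corr in sorted(lagged_correlations(sentiment, returns, max_lag=2).items()):
        print(f"lag {lag:+d}: r = {corr:.2f}")
```

In the synthetic example the coefficient peaks near 1.0 at lag +1, which is the "sentiment precedes price" pattern; with real data, significance should be checked before acting on any coefficient.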

This comprehensive code provides a strong foundation for your Financial Sentiment & Price Correlation Engine.

🚀 Ready to turn raw data into real-world intelligence and career-defining impact?

At Huebits, we don’t just teach Data Science — we train you to build end-to-end solutions that power predictions, automate decisions, and drive business outcomes.

From fraud detection to personalized recommendations, you'll gain hands-on experience working with messy datasets, training ML models, and deploying full-stack data systems — where real-world complexity meets production-grade precision.

🧠 Whether you're a student, aspiring data scientist, or career shifter, our Industry-Ready Data Science Engineering Program is your launchpad.

Master Python, Pandas, Scikit-learn, TensorFlow, Power BI, SQL, and cloud deployment — while building job-grade ML projects that solve real business problems.

🎓 Next Cohort Launching Soon!

🔗 Join Now and become part of the Data Science movement shaping the future of business, finance, healthcare, marketing, and AI-driven industries across the ₹1.5 trillion+ data economy.

📈 6. Social Media Performance Tracker

🔍 Project Overview:

This ETL pipeline is meticulously designed to automate the monitoring and analysis of performance metrics from popular social media platforms, such as Instagram, Facebook, YouTube, and potentially others like X (formerly Twitter) or LinkedIn. Its core function is to systematically extract raw performance data, transform it into meaningful, actionable insights, and then deliver these insights to a centralized repository or a visualization layer.

The pipeline tracks a comprehensive set of metrics including post-level engagement (likes, comments, shares, saves), follower growth, content reach and impressions, video watch time, and audience demographics (where available). By analyzing trends over time and cross-referencing various data points, this system provides a holistic view of social media efficacy. It is an ideal solution for digital marketers, content creators, social media agencies, and businesses aiming to track their Return on Investment (ROI) from social media efforts in near real-time. This project is about turning ephemeral "likes" and "views" into tangible strategic "leverage."

⚙️ Tech Used:

- Languages: Python

- Rationale: Python's robust libraries for API interaction (requests), data manipulation (Pandas), and visualization (Matplotlib, Plotly, Seaborn) make it the ideal language for building the entire ETL process. Its extensive community support and readability further streamline development and maintenance.

- APIs:

- Meta Graph API (for Instagram/Facebook): This is the primary interface for accessing data from Facebook and Instagram professional accounts. It allows programmatic access to insights like post impressions, reach, engagement rates, follower counts, and audience demographics. Proper authentication (access tokens) and understanding of API rate limits are crucial.

- YouTube Data API: Used to retrieve channel and video-level statistics, including views, likes, comments, and subscriber counts. Watch time and audience retention metrics come from the companion YouTube Analytics API, which requires OAuth authorization for the channel being analyzed.

- (Optional: X (formerly Twitter) API, LinkedIn Marketing API, TikTok for Developers API, etc., for broader coverage).

- Database:

- PostgreSQL: A powerful and highly reliable relational database well-suited for storing structured time-series data like social media metrics. It handles complex queries efficiently, provides robust data integrity, and is easily integrated with BI tools for dashboarding. It's scalable for handling growing volumes of historical performance data.

- Google Sheets API: An excellent alternative for simpler projects or for scenarios where sharing data with non-technical users in a familiar spreadsheet format is a priority. It's easy to integrate and requires minimal setup for data storage and retrieval. While less scalable than PostgreSQL for very large datasets, it offers quick visibility and collaboration.

- Libraries:

- Pandas: The workhorse for data cleaning, transformation, aggregation (e.g., calculating daily/weekly averages, summing metrics), and creating time-series datasets from the raw API responses.

- Matplotlib, Plotly, Seaborn:

- Matplotlib/Seaborn: For generating static, high-quality plots to visualize trends (e.g., follower growth over time, engagement rate by content type), distributions, and comparisons.

- Plotly: Essential for creating interactive and dynamic charts (e.g., line charts with zoom, bar charts with hover effects) that can be embedded in web dashboards, allowing users to explore data in detail.

- Requests: For making HTTP requests to interact with the various social media APIs.

- (For Google Sheets API):

- gspread or google-api-python-client: Libraries for interacting with Google Sheets programmatically.

- Dashboarding (Optional):

- Apache Superset: An open-source, powerful, and modern data exploration and visualization platform. It connects to various databases (like PostgreSQL) and allows users to build highly customizable dashboards with minimal code.

- Looker Studio (formerly Google Data Studio): A free, cloud-based data visualization tool from Google. It connects seamlessly with Google Sheets (and other data sources) and provides intuitive drag-and-drop functionality for creating interactive reports and dashboards.
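For the PostgreSQL option, a key design choice is making loads idempotent, so that re-running the pipeline for the same day overwrites metrics instead of duplicating them. The sketch below uses Python's built-in sqlite3 (SQLite 3.24 or newer) as a stand-in so it runs anywhere; the `post_metrics` table, its columns, and `load_metrics` are hypothetical. The CREATE TABLE statement and the INSERT ... ON CONFLICT ... DO UPDATE upsert are also valid PostgreSQL, though a PostgreSQL driver such as psycopg uses %s placeholders instead of ?.

```python
import sqlite3
from typing import Iterable, Tuple

# Hypothetical schema: one row per post per platform per day.
DDL = """
CREATE TABLE IF NOT EXISTS post_metrics (
    platform    TEXT    NOT NULL,
    post_id     TEXT    NOT NULL,
    metric_date TEXT    NOT NULL,
    likes       INTEGER NOT NULL DEFAULT 0,
    comments    INTEGER NOT NULL DEFAULT 0,
    shares      INTEGER NOT NULL DEFAULT 0,
    reach       INTEGER NOT NULL DEFAULT 0,
    PRIMARY KEY (platform, post_id, metric_date)
)
"""

# Upsert: insert new rows, overwrite metrics for rows that already exist.
UPSERT = """
INSERT INTO post_metrics (platform, post_id, metric_date, likes, comments, shares, reach)
VALUES (?, ?, ?, ?, ?, ?, ?)
ON CONFLICT (platform, post_id, metric_date) DO UPDATE SET
    likes    = excluded.likes,
    comments = excluded.comments,
    shares   = excluded.shares,
    reach    = excluded.reach
"""

MetricRow = Tuple[str, str, str, int, int, int, int]


def load_metrics(conn: sqlite3.Connection, rows: Iterable[MetricRow]) -> None:
    """Load daily metric snapshots; re-running for the same day updates rather than duplicates."""
    with conn:  # commits on success, rolls back if anything raises
        conn.execute(DDL)
        conn.executemany(UPSERT, rows)


if __name__ == "__main__":
    conn = sqlite3.connect(":memory:")
    load_metrics(conn, [("instagram", "p1", "2025-01-06", 120, 10, 4, 2000)])
    # A later run for the same post and day, with fresher numbers:
    load_metrics(conn, [("instagram", "p1", "2025-01-06", 150, 12, 5, 2400)])
    print(conn.execute("SELECT likes, reach FROM post_metrics").fetchall())
    # [(150, 2400)]
```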

💼 Use Cases:

- Influencer Analytics Tools for Campaigns: Provides brands and agencies with a detailed breakdown of an influencer's performance (reach, engagement, audience demographics) across various posts and campaigns, aiding in selection and ROI measurement.

- Weekly Engagement Report Generators: Automates the creation of comprehensive reports summarizing key performance indicators (KPIs) like average engagement rate, top-performing posts, and follower growth for internal teams or clients.

- Follower Growth Forecasting: By analyzing historical growth patterns and content performance, the pipeline can provide estimates for future follower growth, aiding in goal setting and strategic planning.

- Competitor Benchmarking on Social Reach: Enables businesses to track the social media performance of their competitors (where public data is available), providing insights into industry trends and competitive advantages.

- Campaign-Level ROI Tracking: Connects social media metrics directly to campaign objectives, allowing marketers to determine the effectiveness and return on investment for specific social media campaigns or content initiatives.

- Content Strategy Optimization: Identifies which types of content (e.g., video vs. image, short-form vs. long-form, specific themes) resonate most with the audience, guiding future content creation.

- Audience Demographics & Persona Refinement: Provides data on audience age, gender, location, and interests (if available via API), helping refine target audience personas.
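The weekly engagement report use case boils down to a group-by over posts. A minimal sketch, assuming posts have already been extracted into simple records: the `Post` fields and `weekly_report` function are hypothetical, the sample posts are illustrative, and engagement rate is defined here as (likes + comments + shares + saves) / reach, which is one common convention among several.

```python
from collections import defaultdict
from dataclasses import dataclass
from datetime import date
from typing import Dict, List


@dataclass
class Post:
    post_id: str
    posted_on: date
    likes: int
    comments: int
    shares: int
    saves: int
    reach: int

    @property
    def engagement_rate(self) -> float:
        # Assumed definition: total interactions divided by accounts reached.
        interactions = self.likes + self.comments + self.shares + self.saves
        return interactions / self.reach if self.reach else 0.0


def weekly_report(posts: List[Post]) -> Dict[str, dict]:
    """Group posts by ISO week; report post count, mean engagement rate, and top post."""
    weeks: Dict[str, List[Post]] = defaultdict(list)
    for post in posts:
        year, week, _ = post.posted_on.isocalendar()
        weeks[f"{year}-W{week:02d}"].append(post)

    report: Dict[str, dict] = {}
    for label in sorted(weeks):
        items = weeks[label]
        report[label] = {
            "posts": len(items),
            "avg_engagement_rate": sum(p.engagement_rate for p in items) / len(items),
            "top_post": max(items, key=lambda p: p.engagement_rate).post_id,
        }
    return report


if __name__ == "__main__":
    posts = [
        Post("a", date(2025, 1, 6), likes=100, comments=20, shares=5, saves=5, reach=1000),
        Post("b", date(2025, 1, 8), likes=50, comments=5, shares=0, saves=5, reach=1000),
        Post("c", date(2025, 1, 13), likes=80, comments=10, shares=10, saves=0, reach=500),
    ]
    for week, summary in weekly_report(posts).items():
        print(week, summary)
```

At larger volumes the same aggregation maps naturally onto a Pandas `groupby` over weekly periods; the plain-Python version just makes each step explicit.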

📈 Impact / ROI:

- 📊 Gives Marketing Teams Instant Insight into What’s Working: Provides immediate, data-driven feedback on content performance, allowing marketers to quickly pivot strategies, optimize campaigns, and allocate resources more effectively.

- 🧠 Powers Smarter Ad Spend Based on Organic Engagement: By understanding what content naturally resonates, businesses can create more effective paid ad campaigns, reducing wasted ad spend and increasing conversion rates.

- 🔁 Saves Time with Automated Reports: Eliminates manual data extraction, aggregation, and report generation, freeing up valuable marketing team hours for strategic planning and creative execution.

- 💼 Helps Agencies Justify Deliverables to Clients: Provides concrete, quantitative proof of performance, enhancing transparency and strengthening client relationships by demonstrating the value delivered.

- 🎯 Enables A/B Testing of Content Based on Real-Time Feedback: Marketers can experiment with different content formats, messaging, or posting times and immediately see which variations yield superior engagement, leading to continuous improvement.